GitHub - Ishank56/Insurance_chatbot

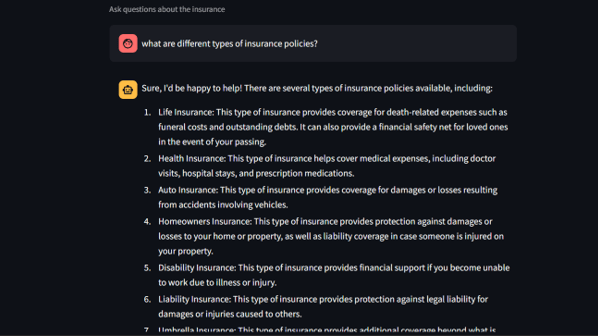

Developed a conversational AI chatbot that answers questions from an insurance handbook PDF (Insurance_Handbook_20103.pdf) using only locally run tools. The objective was a functional, cost-effective chatbot built on Ollama (serving the llama2:7b LLM), ChromaDB (vector search), and Streamlit (UI).

The methodology is Retrieval-Augmented Generation (RAG): the PDF is parsed into paragraph chunks, the chunks are embedded and stored in ChromaDB, each question triggers a semantic search for the most relevant chunks, and those chunks are passed as context in the prompt to the local LLM. Key features include conversational memory, streaming responses, a stop-generation button, and basic escalation triggers.

Running everything locally kept costs down and data private, but it exposed a trade-off: retrieving more chunks (a higher n_results) gives the model more relevant context but slows generation, and how much it slows depends heavily on the hardware. The final prompt engineering instructed the model to answer in the active voice and not to cite sources.
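The RAG loop and chat features described above can be sketched in plain Python. This is a minimal, stdlib-only sketch, not the repository's code: the bag-of-words `embed` and `retrieve` functions stand in for ChromaDB's embedding and vector search, the assembled prompt is what would be sent to llama2:7b through Ollama, and every function name, the `ESCALATION_KEYWORDS` set, and the prompt wording are hypothetical.

```python
import math
import re
import threading
from collections import Counter
from collections.abc import Iterable, Iterator

# Hypothetical escalation keywords; the project's actual triggers are not documented here.
ESCALATION_KEYWORDS = {"human", "agent", "complaint", "lawyer"}


def chunk_paragraphs(text: str, min_chars: int = 20) -> list[str]:
    """Split extracted PDF text on blank lines and drop very short fragments."""
    paragraphs = (" ".join(p.split()) for p in re.split(r"\n\s*\n", text))
    return [p for p in paragraphs if len(p) >= min_chars]


def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], n_results: int = 3) -> list[str]:
    """Return the n_results chunks most similar to the query (the vector-store query step)."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:n_results]


def needs_escalation(message: str) -> bool:
    """Flag messages that contain any escalation keyword as a whole word."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return bool(words & ESCALATION_KEYWORDS)


def build_prompt(question: str, context: list[str],
                 history: list[tuple[str, str]], max_turns: int = 3) -> str:
    """Assemble the LLM prompt from retrieved context and recent conversation turns."""
    recent = history[-2 * max_turns:]  # one turn = one user + one assistant message
    past = "\n".join(f"{role}: {msg}" for role, msg in recent)
    ctx = "\n\n".join(context)
    return (
        "You are an insurance assistant. Answer in the active voice, using only the "
        "context below, and do not cite sources or page numbers.\n\n"
        f"Context:\n{ctx}\n\n"
        f"Conversation so far:\n{past}\n\n"
        f"User: {question}\nAssistant:"
    )


def stream_tokens(tokens: Iterable[str], stop: threading.Event) -> Iterator[str]:
    """Yield streamed tokens until the stop flag is set (e.g. by a Stop button)."""
    for token in tokens:
        if stop.is_set():
            break
        yield token


# Usage: a tiny two-paragraph "handbook" and one question.
handbook = """Collision coverage pays for damage to your car after an accident.

Liability insurance covers injuries and damage you cause to other people.

Short line."""

chunks = chunk_paragraphs(handbook)
question = "What does collision coverage pay for?"
context = retrieve(question, chunks, n_results=1)
prompt = build_prompt(question, context, history=[])
```

Raising `n_results` puts more chunks into the prompt. That can help the model find the right passage, but every extra chunk is more text for a 7B model to process on local hardware, which is the speed trade-off the project ran into. Capping `history` at a few turns keeps the conversational memory from growing the prompt without bound.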

16 Feb 2025