The Invisible Backbone of AI: Showcasing Data Labeling Projects in Your Portfolio

Team Fueler

25 Aug, 2025

In the dazzling world of artificial intelligence, where sleek ML demos steal the limelight and autonomous vehicles sweep award ceremonies, another team quietly practices the discipline that makes every breakthrough possible. Data-labeling professionals, the people who annotate, curate, and validate the very information machines learn from, are the overlooked backbone of AI, tirelessly turning unstructured noise into the polished gold that fuels headline-making algorithms. Yet the very specialists who help those algorithms excel often struggle to showcase their achievements in a portfolio, online or offline.

The difficulty is understandable. A polished product page, an interactive graph, or a motion-study video can dazzle in seconds, while a well-organized spreadsheet with thousands of colour-coded rows is instantly dismissed as "boring" by the untrained eye. How, then, can anyone convey the skill of marking 12 distinct anomalies in a single thoracic MRI slice, or of tracking linguistic cues and culture-specific irony across a dozen variants of the same sentence? The answer lies in reframing the work: every annotation turns undifferentiated bulk into authoritative context, and every labeled screen is an exercise in domain mastery, quality governance, and forward-looking design that creates the information algorithms will one day learn from.

Understanding the True Value of Data Labeling Work

Data labeling is the bedrock on which artificial intelligence models learn to perceive and navigate the world. Every autonomous car that threads through city streets draws on meticulously annotated traffic scenarios. Every streaming algorithm that proposes your next binge-worthy title leans on user-preference data painstakingly tagged by human eyes. The advanced language models that now mediate so much of our daily conversation relied on corpora into which domain experts poured thousands of hours, refining every detail for accuracy and resonance.

Often dismissed as mindless, transactional clicking, data annotation is in fact a craft that requires a striking blend of discipline and discernment. Labeling experts must grasp each project's unique objectives, apply intricate and often subtle guidelines with absolute consistency, and make sound judgments in the ambiguous cases that fall outside the norm. The most proficient annotators notice minute discrepancies that seem trivial to the untrained eye but, accumulated across millions of examples, would warp model behavior. They recognize the contours of potential algorithmic bias and flag toxic or misleading data long before it reaches the model.

Today's data labeling initiatives take on projects so specialized that only dedicated subject-matter knowledge yields quality results. In medical imaging, annotators must understand not only standard anatomy but also how pathologies present differently across imaging modalities. Legal document classification projects hinge on accurately tagging clauses that reference arcane statutory language, fast-changing precedents, and jurisdictional subtleties. Social media sentiment tasks demand fluency in highly local dialects, the shifting meanings of memes, and syntax that changes almost daily. These demands elevate labeling from a repetitive mechanical step into a specialized discipline grounded in domain expertise.

Effective portfolios flip conventional metrics on their head. Rather than simply counting labeled instances, do the arithmetic in terms of your audience's time and outcomes. First, frame the dataset and the friction it presented. Then walk through, for example, a new annotation template that cut labeling time from fourteen minutes to seven per frame by guiding annotators' eye movements and recall. Next, turn to the residual errors: show how pre-annotated grids surfaced rare but data-poisoning misidentifications, a small fraction that, left unchecked, could have undermined a two-year hypothesis and the funding behind it. How early you spotted the problem and intervened demonstrates judgment that carries from project to project.
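To make that arithmetic concrete, here is a minimal Python sketch that converts a per-frame time saving into total hours saved and a throughput multiplier. The function name `labeling_savings` and the batch size of 10,000 frames are hypothetical, chosen only for illustration; the fourteen-to-seven-minute figures are the example from the paragraph above.

```python
def labeling_savings(frames: int, minutes_before: float, minutes_after: float) -> dict:
    """Translate a per-frame speedup into total hours and relative throughput."""
    if minutes_before <= 0 or minutes_after <= 0:
        raise ValueError("per-frame times must be positive")
    hours_before = frames * minutes_before / 60
    hours_after = frames * minutes_after / 60
    return {
        "hours_before": hours_before,
        "hours_after": hours_after,
        "hours_saved": hours_before - hours_after,
        # Frames per hour after the change divided by frames per hour before.
        "throughput_multiplier": minutes_before / minutes_after,
    }


# Hypothetical batch size; the 14 -> 7 minute figures come from the example above.
result = labeling_savings(frames=10_000, minutes_before=14, minutes_after=7)
print(f"{result['hours_saved']:.0f} hours saved, "
      f"{result['throughput_multiplier']:.1f}x throughput")
```

For this hypothetical batch it prints `1167 hours saved, 2.0x throughput`, a framing that lands with a listener far better than "labeled 10,000 frames".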

Last, and crucially, tailor the delivery to your audience. Technical reviewers want the math: comparisons against gold-standard labels, Jaccard overlap scores, and the pooling iterations that reduced variance. Senior business stakeholders, by contrast, want the big picture: how your dataset unblocked a long-awaited pilot, and how your sense of urgency turned a rough initial dataset into a functioning MVP. The strongest narratives connect both views through metrics each audience already tracks, such as lead time and delivery windows.
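When technical reviewers ask about overlap for bounding-box work, they usually mean a measure such as intersection-over-union (IoU), also known as the Jaccard index: the area two boxes share divided by the area they cover together. The sketch below assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`; the coordinates are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union (Jaccard index) of two axis-aligned boxes."""
    # Corners of the overlapping rectangle, if any.
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so disjoint boxes contribute no intersection area.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two annotators' boxes for the same object (hypothetical coordinates).
annotator_1 = (10, 10, 50, 50)
annotator_2 = (20, 20, 60, 60)
print(round(iou(annotator_1, annotator_2), 3))
```

Here the boxes share a 30 by 30 region out of a combined 2,300 square units, so the score is about 0.391. Averaging this score across many annotator pairs is one simple way to report boundary consistency.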

Visual artifacts reshape how viewers appreciate the impact of your data labeling. Pair raw samples with your marked-up outputs in side-by-side panels so audiences instantly grasp the change your work made. Add flowcharts that outline your review loops and consistency checks; the diagrams quietly convey the rigor behind each batch. Plotting accuracy trends and cycle-time reductions on simple line graphs keeps the story conversational and persuasive, and makes ROI discussions far more concrete. Replace abstract, heroic language with tangible, repeatable practices, and your contributions will stay in readers' memory.

Whether you use Labelbox, Scale AI, or an in-house UI, every keystroke on the annotation canvas rests on behind-the-scenes tooling skill: keyboard shortcuts, custom mask libraries, review filters, and latency management. Command of statistical sampling techniques and tiered review processes brings structure to volume. When model drift or annotator fatigue threatens quality, a working understanding of the model lets you replace panic with structured error taxonomies and targeted corrective sampling. Ultimately, the time saved in batch processing rests on an underlying foundation of domain literacy.
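As one illustration of sampling for a tiered review, the sketch below draws a stratified QA sample: a fixed percentage of each annotator's items (rounded up), with a floor so that low-volume annotators are still checked. The function name, the 5 percent rate, and the floor of five items are hypothetical choices for illustration, not a standard or any tool's built-in feature.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def sample_for_review(items: List[Tuple[str, str]], review_percent: int = 5,
                      min_per_annotator: int = 5, seed: int = 0) -> Dict[str, List[str]]:
    """Stratified QA sample for a second review tier.

    `items` holds (annotator_id, item_id) pairs. For each annotator, draw
    review_percent % of their items (rounded up), but at least
    `min_per_annotator`, and never more than they actually labeled.
    """
    rng = random.Random(seed)  # fixed seed keeps the audit sample reproducible
    by_annotator: Dict[str, List[str]] = defaultdict(list)
    for annotator, item in items:
        by_annotator[annotator].append(item)

    sample: Dict[str, List[str]] = {}
    for annotator in sorted(by_annotator):
        owned = by_annotator[annotator]
        k = -(-review_percent * len(owned) // 100)  # integer ceiling division
        k = min(len(owned), max(min_per_annotator, k))
        sample[annotator] = rng.sample(owned, k)  # draw without replacement
    return sample


# Hypothetical workload: one high-volume and one low-volume annotator.
items = [("alice", f"img_{i}") for i in range(200)] + \
        [("bob", f"img_{i}") for i in range(200, 240)]
picked = sample_for_review(items)
print({name: len(ids) for name, ids in picked.items()})
```

Stratifying by annotator, rather than sampling the whole pool at random, keeps a single prolific labeler from dominating the audit. Integer ceiling division avoids floating-point surprises when computing the sample size.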

Breadth across data modalities further strengthens your authority. Drawing precise object masks rather than overlapping bounding boxes for dense aerial imagery calls for a different vocabulary and rhythm than assembling the dialogue data that flows into a conversational agent's context window. When labels must avoid demographic stereotypes and capture social nuance, the supporting expertise must be linguistic and cultural, not just a matter of using the interface. Annotating graph data, slicing value ranges, and moving points into corrective buckets add yet another set of skills; tasks involving variance and reward calculations call not just for charts but for hands-on experience on the annotation canvas itself.

Quality assurance is often the unsung hero of enterprise-grade data annotation. Designing a quality framework, specifying inter-annotator reliability thresholds, and harmonizing labeling expectations across millions of samples mandate project management rigor, analytical foresight, and a relentless commitment to detail—skills that go beyond routine labeling proficiency. Organizations like oworkers, which provide specialized data labeling services, differentiate themselves by embedding these disciplines into every project, ensuring that systematic quality protocols outlive the noise of any single assignment.

Elevating the Conversation with Evidence and Influence

When metrics take center stage, subjective impressions give way to data-driven demonstrations of annotation expertise. Accuracy curves, inter-annotator kappa statistics, and throughput benchmarks are the artifacts that convert workflow anecdotes into measurable professional value. Ideally, link metric improvements to downstream impact: show that a refinement in labeling consistency corresponds to a measurable rise in the model's F1 score, or that tighter labeling precision translates into fewer production rollbacks and faster ROI.
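For readers unfamiliar with the kappa statistic, here is a minimal Python sketch of Cohen's kappa for two annotators. It corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement rate and p_e is the chance agreement implied by each annotator's own label frequencies. The labels below are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same class if each labeled
    # independently at their own observed class rates.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical class for every item; kappa is
        # undefined here, and this sketch reports perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same ten images.
annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "cat", "dog", "dog"]
annotator_b = ["cat", "dog", "dog", "dog", "cat", "dog", "cat", "cat", "cat", "dog"]
print(round(cohens_kappa(annotator_a, annotator_b), 2))
```

The annotators agree on 8 of 10 items (p_o = 0.8), but with balanced classes chance alone would give p_e = 0.5, so kappa is 0.6. That gap is exactly why kappa is more persuasive to technical reviewers than raw percent agreement.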

Yet proprietary or aggregated reporting often obscures direct attribution, which makes proactive record-keeping your strategic advantage. Capture quality benchmarks, throughput spikes, and methodological refinements in real time. Document edge cases and the micro-protocols that resolved them. Notes that look like tactical noise when you write them become solid evidence when you reconstruct your achievements a year later, giving the portfolio a continuity and authenticity that recollection alone cannot provide.

Instead of just tallying projects on a CV, develop rich, step-by-step case studies that carry viewers from kickoff to closeout. Map the data hurdles you faced, outline the cues that shaped your annotation style, summarize the triple-check you employ to guarantee uniform standards, and quantify the lift in model accuracy after your work. Walk readers through your thinking at each junction so that your rationale becomes the thread of each success, not just the static results.

When prospective clients review a portfolio, the first concern they often raise is confidentiality. True, the most valuable projects usually involve proprietary or sensitive datasets that, by policy, cannot be shown publicly. Counter this by building surrogate cases: a table of synthetic data that follows the same distribution patterns, a domain story that poses the same annotation puzzles but uses harmless names, or a de-identified subset that exposes no sensitive fields but still shows how your approach scales. The goal is to demonstrate workflow, context, and nuance without risking real secrets.
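One simple way to build such a surrogate is sketched below, under the assumption that the class distribution is the property you want to preserve. The function keeps the exact class counts of a confidential label set, replaces every identifier with a neutral placeholder, and shuffles the rows so their order no longer mirrors the source. The function name and the class mix are hypothetical. This preserves only the label distribution; any images, text, or feature fields you show alongside it would still need to be synthetic or properly de-identified on their own.

```python
import random
from collections import Counter
from typing import List, Tuple


def surrogate_dataset(real_labels: List[str], seed: int = 42) -> List[Tuple[str, str]]:
    """Return (placeholder_id, label) rows with exactly the same class counts
    as `real_labels`, shuffled so row order no longer mirrors the source."""
    rng = random.Random(seed)
    labels = list(real_labels)  # copy so the caller's list is left untouched
    rng.shuffle(labels)
    return [(f"sample_{i:04d}", label) for i, label in enumerate(labels)]


# Hypothetical class mix standing in for a confidential medical-imaging project.
real = ["benign"] * 70 + ["malignant"] * 25 + ["unclear"] * 5
surrogate = surrogate_dataset(real)
print(Counter(label for _, label in surrogate))
print(surrogate[:3])
```

A surrogate like this lets you discuss class imbalance, rare-category handling, and review coverage with a prospective client without revealing a single real record.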

Beyond the data itself, the field suffers from a stereotype that sets labeling work against strategic vision. Refute it by elevating your narrative: illustrate how a rare edge case first stalled model convergence, how you and a focused group of annotators jointly defined it and uncovered the hidden problem, and how the resulting consensus eliminated that error category from the confusion matrix. Highlight each workflow tweak, each exploratory notebook step that became a reusable rule, and the non-obvious patterns you reported that informed downstream training schedules. Frame yourself as a cold-eyed data archaeologist, not a simple clipboard operator.

When large collaborative projects overshadow individual work, your scale and impact can feel abstract. To make your influence clear, foreground your own role and leadership. Quantify metrics tied strictly to your contributions, such as time savings, error reductions, or throughput gains directly attributable to your actions. Spotlight moments where your technical judgment shifted project strategy or final metrics, for example a decision that kept a project from missing its deadlines. If you mentored other labelers or wrote pivotal annotation protocols, those activities deserve headline status, not footnotes.

Constructing a Compelling Professional Narrative

The most persuasive labeling portfolios weave discrete projects into a trajectory of professional growth. Plot your journey from straightforward labeling to intricate, domain-specific challenges. Show how successive projects refined the same skill, perhaps scaling up to denser bounding-box annotation on similar structures, or moving from diabetes-related image labeling to broader pathology image labeling. Framing each project as a developmental milestone shows how your expertise matured and suggests how quickly you can adapt to the next assignment.

Arrange your portfolio thematically—by the types of data you confront, the verticals you serve, or the technical barriers. For example, you might divide the sections into text, image, video, audio, and then nest them under healthcare, e-commerce, and automotive. Under each data or domain header, flag the relevant technical hurdles—multilingual captions, high-noise audio, border-region images. Doing so allows clients or employers to quickly identify relevant experience and to read your range of work as systematic rather than haphazard.

Deliberate professional development activities substantially reinforce your portfolio narrative by providing clear evidence of your dedication to ongoing learning. When you add certifications in in-demand technologies, attend key industry conferences, or meaningfully contribute to crowdsourced data labeling projects, you demonstrate an active investment in both your own growth and the vitality of the wider professional community. Each of these efforts also widens your network and offers early exposure to emerging trends and well-vetted best practices.

The data labeling field is being reshaped daily by advancing automation, as tools grow more capable and modern AI models demand ever more nuance. Staying relevant as circumstances shift depends on recognizing these turning points and claiming the narrow space where human judgment still outperforms machines, while also mastering the tools built to multiply human speed and precision. Anticipate where the human touch is vital, and keep learning the tools that amplify your expertise.

As the landscape widens, adjacent niches such as small-scale curation of media labels, model-suggested example selection, and closely supervised human-in-the-loop AI training open up promising, highly practical opportunities. Building skills in these emerging areas, while never letting your core annotation competence fade, positions you for continued professional relevance and growth.

Often regarded as a quiet, unseen mechanism, data labeling expertise is in fact the linchpin of any robust AI system and deserves both respect and visibility. When you present your annotation skills to colleagues and decision-makers, you not only raise industry standards but also build a persuasive case for your own career growth. The future of AI is inextricably tied to the precision and nuance that trained humans impart, which is why well-honed labeling talent is now a rare and indispensable asset.

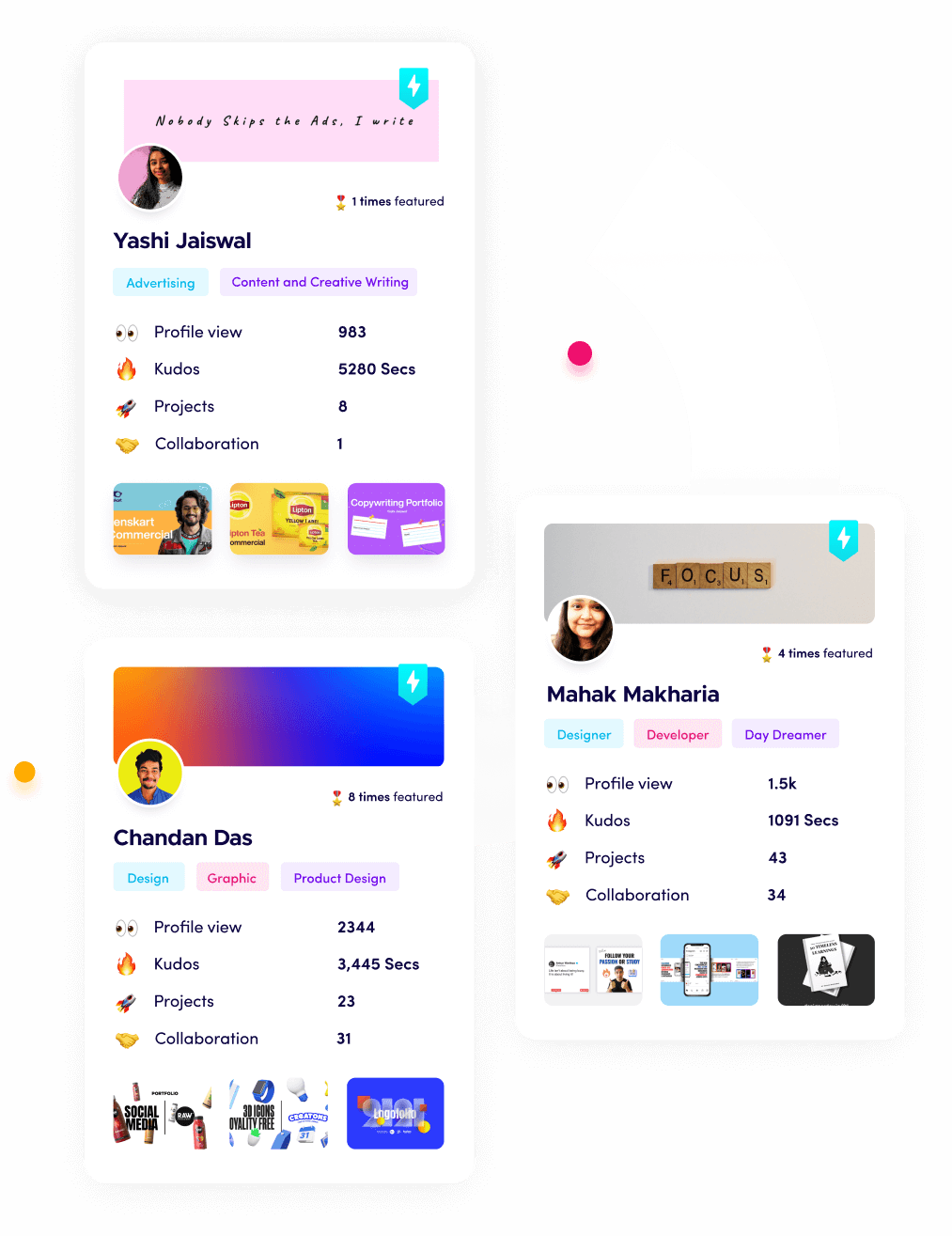

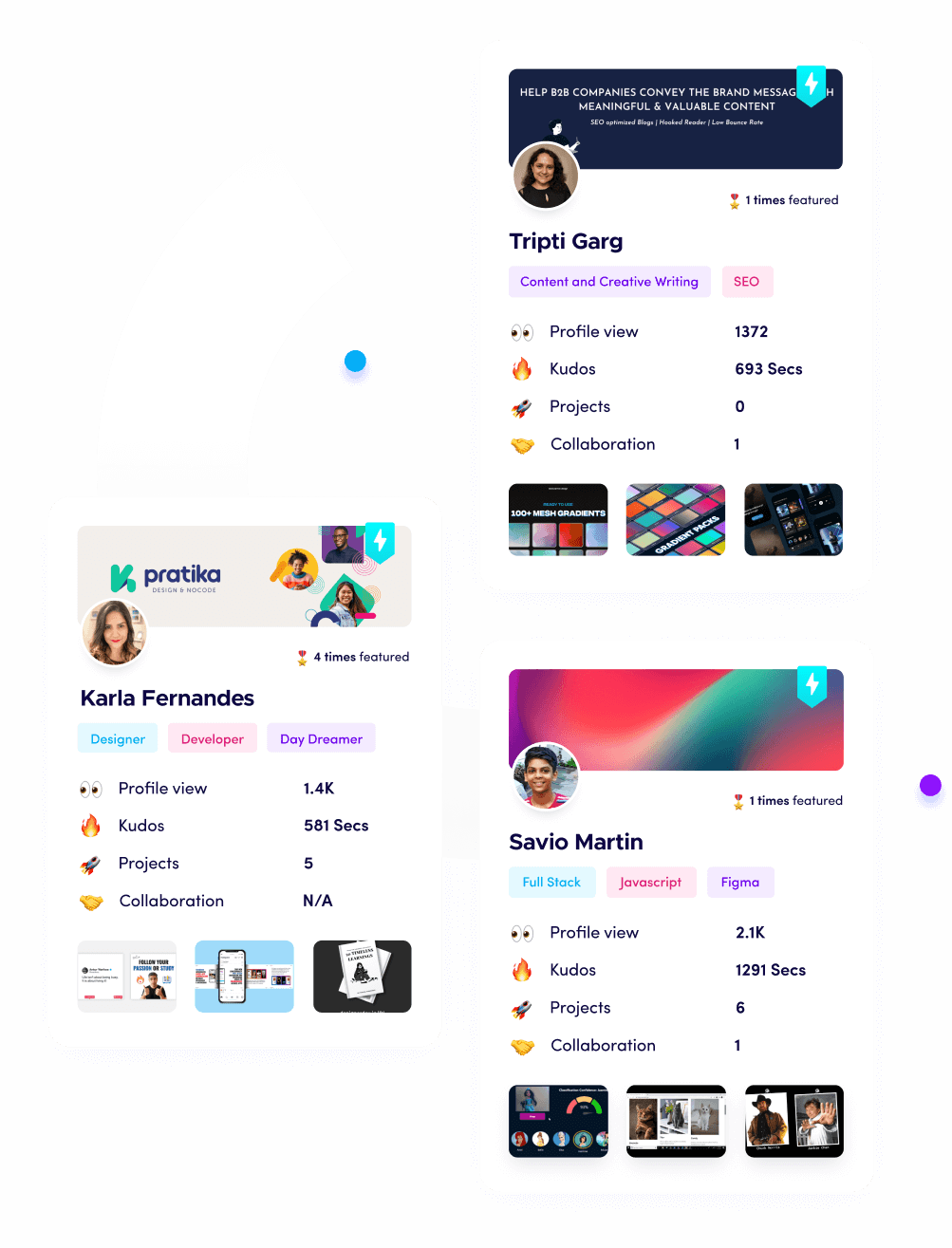

What is Fueler Portfolio?

Fueler is a career portfolio platform that helps companies find the best talents for their organization based on their proof of work.

You can create your portfolio on Fueler. Thousands of freelancers around the world use Fueler to create professional-looking portfolios and become financially independent.

Sign up for free on Fueler or get in touch to learn more.