RunPod in 2026: Usage, Revenue, Valuation & Growth Statistics

Riten Debnath

19 Oct, 2025

RunPod has rapidly become a leading GPU cloud platform for AI developers seeking cost-effective, scalable, and globally accessible infrastructure. In 2026, its serverless GPU pods, autoscaling clusters, and multi-region presence have powered thousands of AI projects with unmatched convenience and affordability. But how does RunPod stack up in key usage and financial metrics amid fierce cloud competition?

I’m Riten, founder of Fueler, a platform that helps freelancers and professionals get hired through their work samples. In this article, I’ll walk you through RunPod’s usage, revenue, valuation, and growth statistics for 2026. Like a strong portfolio proves skill and credibility, these data points reveal why RunPod is a go-to platform for AI infrastructure.

What is RunPod?

RunPod is a GPU cloud platform designed specifically for AI and machine learning workloads. It offers on-demand, serverless GPU pods with multi-node autoscaling, which means developers can spin up powerful, distributed clusters without the usual infrastructure headaches. With support for 30+ global regions, RunPod is popular among micro-businesses, startups, and AI teams that want to innovate rapidly while keeping costs under control, thanks to its flexibility, affordability, and ease of use.

Key Features of RunPod (2026)

- Instant Serverless GPUs: Deploy GPU pods instantly, paying only when active, enabling frictionless AI training and inference without overhead costs.

- Autoscaling Clusters: Seamlessly scale from zero to thousands of GPUs per workload, meeting demand spikes efficiently and supporting bursty AI jobs affordably.

- Global Data Centers: Available in over 30 regions worldwide, RunPod ensures low-latency access and redundancy for distributed teams and applications.

- Flexible Pricing Models: Offers on-demand, savings plans, and discount spot pricing to optimize costs based on workload patterns and budget.

- Persistent Volumes & Agent Templates: Persistent volumes store data between sessions, and ready-made deployment templates for popular AI frameworks accelerate development.
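To make the "scale from zero" idea in the autoscaling feature concrete, here is a minimal sketch of how an autoscaler might pick a worker count from the current request rate. This is a generic illustration, not RunPod's actual scaling logic: the function name `workers_needed` and the parameters `per_worker_rps` and `max_workers` are hypothetical, and the 25 requests per second per worker is an assumed figure.

```python
import math


def workers_needed(requests_per_second: float, per_worker_rps: float, max_workers: int) -> int:
    """Return how many GPU workers to run for the observed request rate.

    Hypothetical helper: per_worker_rps is an assumed throughput for one
    worker, and max_workers is an assumed upper limit on the cluster size.
    """
    if per_worker_rps <= 0:
        raise ValueError("per_worker_rps must be positive")
    if requests_per_second <= 0:
        return 0  # idle workload: scale to zero so nothing is billed
    # Round up so capacity always covers the load, then respect the cap.
    return min(max_workers, math.ceil(requests_per_second / per_worker_rps))


if __name__ == "__main__":
    print(workers_needed(0, 25, 100))     # idle workload
    print(workers_needed(1000, 25, 100))  # traffic spike
```

With these assumed numbers, an idle workload runs no workers, while a spike to 1,000 requests per second asks for 40 workers, as long as that stays under the cap.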

Usage Statistics (2026)

- RunPod supports a community of over 100,000 active developers globally, with growing adoption in AI startups and research labs.

- Monthly GPU instance launches surpass 500,000, reflecting robust platform usage for AI model training and production inference.

- Average session duration across the web console and API is around 30 minutes, indicating significant user engagement and active task processing.

- The platform’s autoscaling clusters handle more than 1,000 inference requests per second for clients like Scatter Lab, while reducing costs by up to 50% compared to traditional cloud providers.

- Mobile and remote access workflows account for approximately 35% of usage, supporting diverse work environments and global teams.

Revenue & Funding (2026)

- RunPod has raised $22 million in funding to date, including a $20 million Seed round co-led by Intel Capital and Dell Technologies Capital in 2024.

- The company’s estimated annual revenue is approximately $10.7 million, with rapid yearly growth fueled by micro-business and startup adoption.

- Revenue comes from hourly GPU usage fees ranging from $0.40 to $4.18 per hour, depending on the GPU type (for example A100, H100, or RTX 4090).

- Flexible subscription and savings plans encourage sustained customer commitments, alongside cost-effective spot pricing for budget-conscious users.

- Strategic focus on optimizing user experience and expanding region coverage supports revenue scaling and platform stickiness.
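As a rough sanity check on the figures above, the sketch below works out how many GPU-hours per year the estimated $10.7 million would imply at each end of the quoted price range. It assumes, purely for illustration, that all revenue comes from hourly GPU usage billed at a single rate, which ignores storage, discounts, and enterprise contracts. The function name `implied_gpu_hours` is hypothetical.

```python
ANNUAL_REVENUE_USD = 10_700_000  # estimated annual revenue quoted in this article
LOW_RATE_USD = 0.40              # cheapest hourly GPU rate quoted in this article
HIGH_RATE_USD = 4.18             # most expensive hourly GPU rate quoted in this article


def implied_gpu_hours(revenue_usd: float, hourly_rate_usd: float) -> float:
    """GPU-hours needed to earn revenue_usd if every hour is billed at one rate."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly_rate_usd must be positive")
    return revenue_usd / hourly_rate_usd


# Upper bound: every billed hour at the cheapest rate.
upper_bound = implied_gpu_hours(ANNUAL_REVENUE_USD, LOW_RATE_USD)
# Lower bound: every billed hour at the most expensive rate.
lower_bound = implied_gpu_hours(ANNUAL_REVENUE_USD, HIGH_RATE_USD)

if __name__ == "__main__":
    print(f"Between {lower_bound:,.0f} and {upper_bound:,.0f} GPU-hours per year")
```

Under that simplification, the estimate lands somewhere between roughly 2.56 million and 26.75 million billed GPU-hours a year. The real figure depends on the mix of GPU types and discounts, which is not public.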

Valuation & Growth Prospects (2026)

- While valuation is not publicly disclosed, RunPod’s continued funding success and rapid user growth position it as a promising mid-stage AI infrastructure startup.

- Market analysts expect valuation growth in line with the global AI infrastructure sector, which is projected to reach tens of billions of dollars by 2030.

- Partnerships with major investors and AI ecosystem players boost RunPod’s competitive advantage and technology roadmap.

- Expansion plans into new data centers and enterprise-grade solutions indicate a trajectory toward comprehensive cloud provider status.

- RunPod’s agility and developer focus differentiate it in a market dominated by hyperscalers, opening growth opportunities in emerging AI applications.

Why it Matters: RunPod simplifies and democratizes access to powerful GPU infrastructure crucial for AI research and deployment. Its cost-effective, highly scalable platform enables startups and developers to experiment, build, and scale AI models without large upfront costs or infrastructure complexity. This accessibility accelerates AI innovation and adoption across industries, from health tech to autonomous vehicles.

Pricing (2026)

- GPU usage rates vary by GPU type: from $0.40/hour for entry-level GPUs (e.g., A4000) to $4.18/hour for flagship GPUs (e.g., H100 80GB).

- Savings plan discounts and spot instances can reduce costs by up to 50%, which makes them a good fit for non-critical or burst workloads.

- No setup fees; pay-as-you-go pricing supports flexibility for micro-businesses and individual developers.

- Enterprise pricing offers custom packages with SLAs tailored to business needs.

- Persistent storage and multi-node cluster usage are priced based on specific resource consumption.
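To see what these rates mean for an actual job, here is a small sketch that estimates the bill for a run. It uses the hourly rates and the "up to 50%" discount quoted in this article. The function `estimate_cost` and the example job (8 GPUs for 24 hours) are hypothetical, and storage and cluster charges are left out.

```python
def estimate_cost(hourly_rate_usd: float, gpu_count: int, hours: float, discount: float = 0.0) -> float:
    """Estimate the GPU bill in USD for a job.

    discount is a fraction between 0 and 1 (0.5 means 50% off), standing in
    for a savings plan or spot price. Storage and other fees are not included.
    """
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    if gpu_count < 0 or hours < 0:
        raise ValueError("gpu_count and hours must be non-negative")
    return round(hourly_rate_usd * gpu_count * hours * (1.0 - discount), 2)


if __name__ == "__main__":
    # Hypothetical job: 8 flagship GPUs at $4.18/hour for 24 hours.
    print(estimate_cost(4.18, 8, 24))                # 802.56
    # Same job with the maximum 50% discount applied.
    print(estimate_cost(4.18, 8, 24, discount=0.5))  # 401.28
    # One entry-level GPU at $0.40/hour for 10 hours.
    print(estimate_cost(0.40, 1, 10))                # 4.0
```

The gap between the first two numbers is why spot and savings pricing matter most for long, multi-GPU jobs that can tolerate interruption.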

Why Fueler Helps

RunPod offers the infrastructure foundation to power AI projects, but showcasing those projects professionally matters to freelancers and developers. Fueler helps you build a portfolio from AI models, applications, and demos hosted on RunPod, creating credible proof of skills that attracts clients and employers and turns talent into opportunities.

Final Thoughts

RunPod’s growth story highlights the evolving landscape of AI infrastructure, where cost-efficiency, scalability, and global access are paramount. Its innovative serverless GPU pods, autoscaling, and developer-centric approach enable a diverse community to innovate faster and smarter. As AI adoption explodes, RunPod’s platform offers a flexible, affordable backbone for the next generation of AI creators.

FAQs

1. What types of GPUs does RunPod offer for AI workloads?

RunPod provides GPUs ranging from the entry-level A4000 to the high-end H100 80GB, catering to various AI model sizes and use cases.

2. How does RunPod compare cost-wise to big cloud providers?

RunPod can reduce costs by up to 50% using spot pricing and autoscaling, making it attractive for startups and research.

3. Can I scale GPU usage dynamically on RunPod?

Yes. Autoscaling can take GPU pods from zero to thousands, which is ideal for fluctuating AI workloads.

4. Is RunPod suitable for production AI deployments?

Absolutely, with SLA-backed enterprise solutions and global data centers ensuring performance and reliability.

5. What regions does RunPod cover?

RunPod offers GPUs across 30+ global locations for low latency and redundancy worldwide.

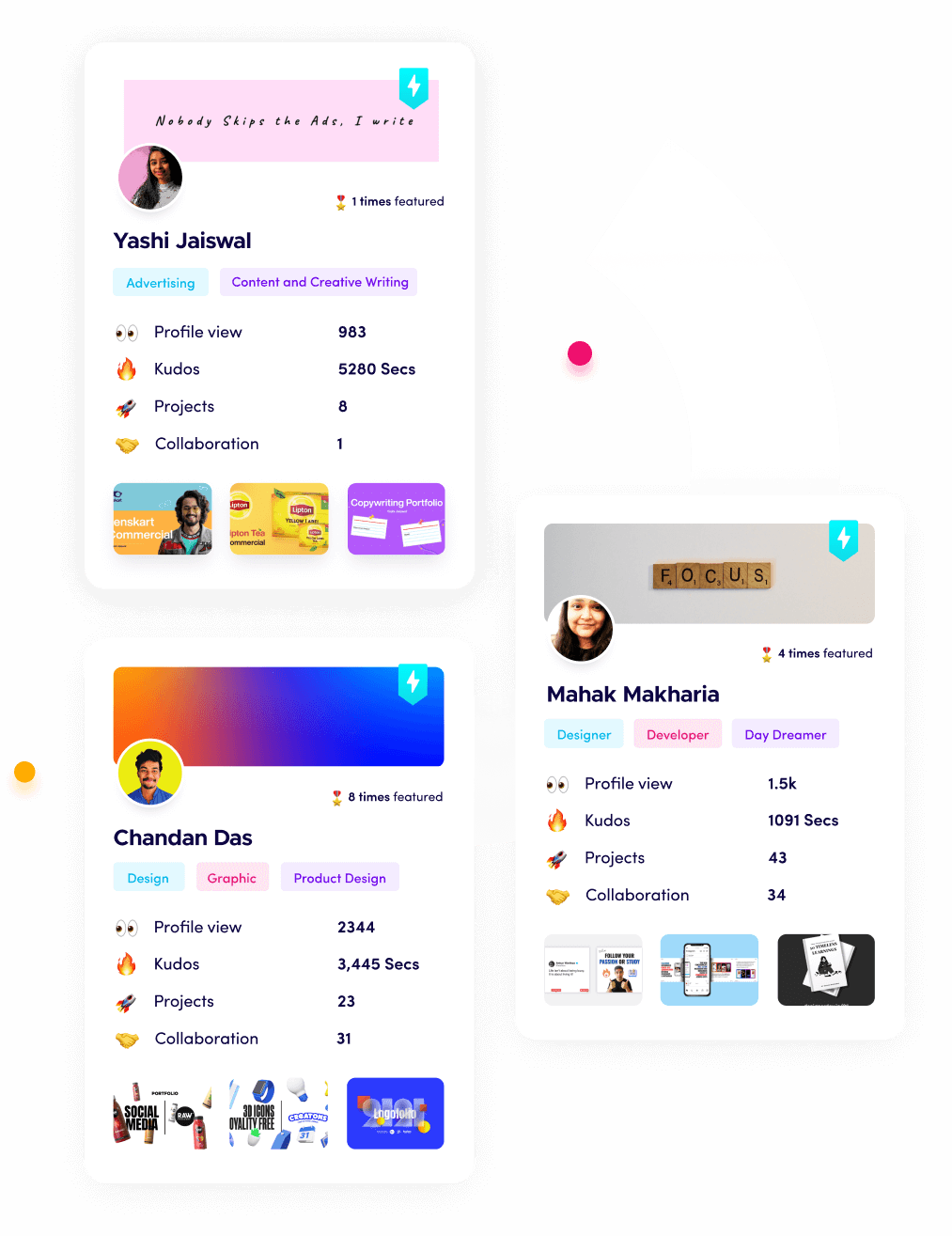

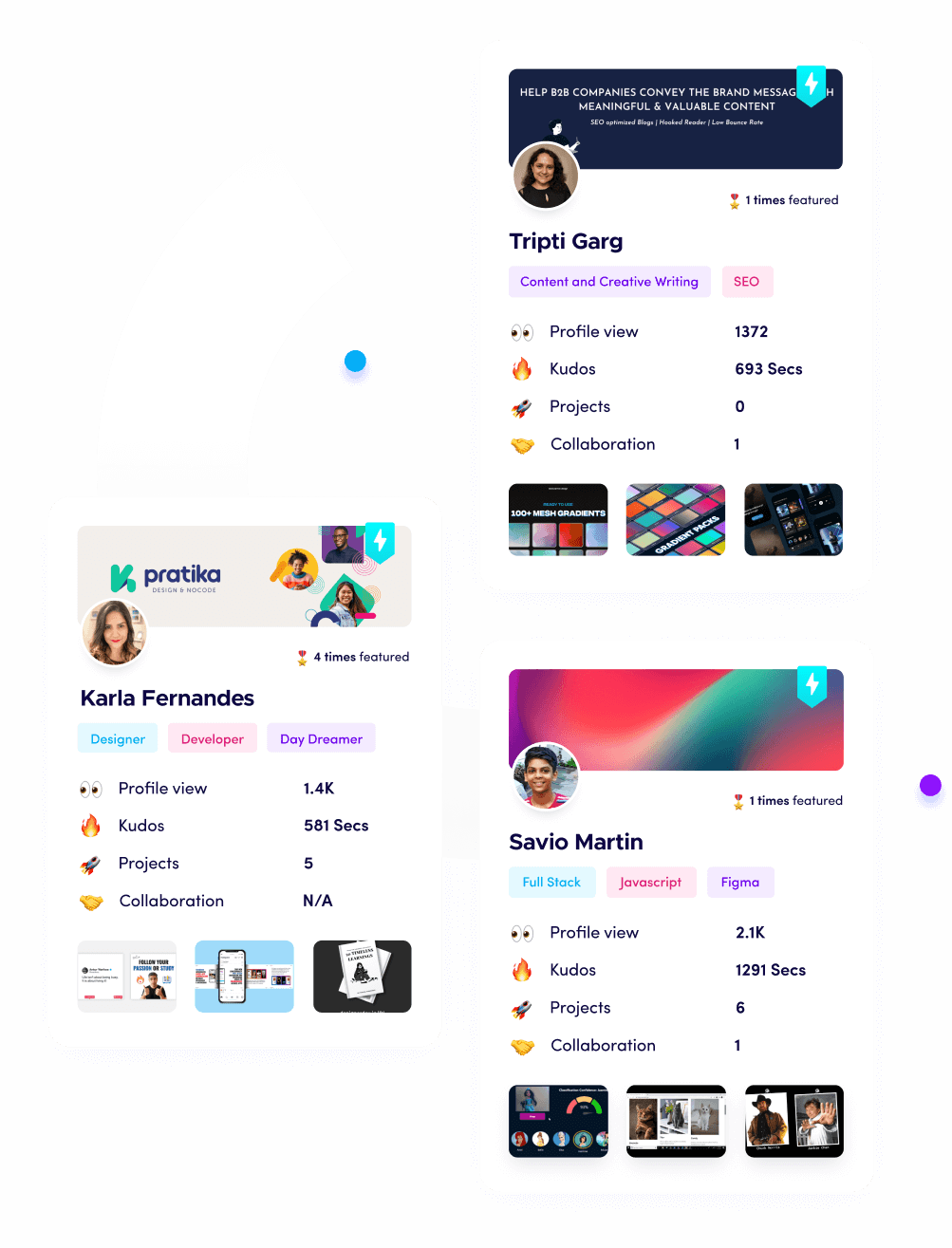

What is Fueler Portfolio?

Fueler is a career portfolio platform that helps companies find the best talent for their organization based on proof of work. You can create your portfolio on Fueler. Thousands of freelancers around the world use Fueler to create professional-looking portfolios and become financially independent. Discover inspiration for your portfolio.

Sign up for free on Fueler or get in touch to learn more.