How to Build Scalable AI Applications in 2026

Riten Debnath

11 Oct, 2025

Artificial Intelligence is evolving faster than ever, and businesses are racing to build AI applications that not only work but can also grow smoothly as demand increases. However, creating AI apps that handle increasing users, data, and complexity without slowing down or breaking is a significant challenge. Getting it right can make the difference between success and failure in 2026’s competitive tech market.

I’m Riten, founder of Fueler, a platform that helps freelancers and professionals get hired through their work samples. In this article, I’ll walk you through how to build AI applications that scale. But beyond mastering skills, the key is presenting your work smartly. Your portfolio isn’t just a collection of projects; it is your proof of skill, your credibility, and your shortcut to trust.

Let’s explore the essential steps and tools you need to build AI applications that scale effectively in 2026.

Understand What Makes an AI Application Scalable

Building an AI application is more than just training a model; it’s about preparing infrastructure and design so the app can handle growth smoothly. Scalability means your AI application can serve more users or process more data without losing speed or accuracy.

Core elements of scalability to focus on:

- Efficient AI models that maintain performance with larger inputs

- Cloud infrastructure that can automatically allocate extra resources

- Flexible data pipelines that handle increasing data volume

- Robust APIs and microservices allowing easy expansion

Why it matters: Without planning for scalability, applications can crash or slow down during traffic peaks, frustrating users and losing business. In 2026, scalable AI apps are expected as a baseline, not an afterthought.

Choose the Right Architecture for Scalability

Architecture defines how the parts of your AI application communicate and work together. The right architecture supports smooth growth by isolating components to prevent bottlenecks.

Popular architectures include:

- Microservices: Breaking the AI system into small independent services for modularity

- Serverless compute: Using cloud functions that automatically scale based on demand

- Distributed computing: Spreading processing across many machines to balance the load

- Containerization (Docker, Kubernetes): Packaging code consistently and managing scalable environments

Why it matters: A scalable architecture simplifies updating, debugging, and expanding AI apps. Choosing the right pattern aligns your app’s growth with business needs and builds a strong foundation for future innovation.
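To make the idea of isolating components more concrete, here is a minimal sketch of a bounded queue sitting between request intake and a pool of inference workers. It uses only the Python standard library. The names `fake_model` and `run_workers` are hypothetical and exist only for this illustration; in a real system the queue would more likely be a message broker and the workers separate services or containers.

```python
import queue
import threading


def fake_model(text: str) -> int:
    """Hypothetical stand-in for a model call: returns the input length."""
    return len(text)


def run_workers(requests, num_workers=4, max_pending=8):
    """Process requests through a bounded queue with a pool of worker threads."""
    pending = queue.Queue(maxsize=max_pending)  # bounded, so producers feel backpressure
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            item = pending.get()
            if item is None:  # sentinel value: this worker should stop
                return
            request_id, text = item
            output = fake_model(text)
            with lock:
                results[request_id] = output

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for item in enumerate(requests):
        pending.put(item)  # blocks while the queue is full
    for _ in threads:
        pending.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    # Return outputs in the original request order
    return [results[i] for i in range(len(requests))]
```

Because intake and inference only share the queue, the number of workers can be raised or lowered without touching the code that accepts requests, which is the property the architectures above are meant to provide.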

Leverage Scalable Cloud Platforms and Infrastructure

Cloud platforms provide essential tools and services that let AI applications scale without worrying about owning physical servers.

Leading cloud platforms for AI in 2026:

- Amazon Web Services (AWS): Offers comprehensive AI and machine learning services with auto-scaling features

- Google Cloud Platform (GCP): Known for its AI research and TensorFlow integration capabilities

- Microsoft Azure: Strong in enterprise AI and hybrid cloud infrastructure

- IBM Cloud: Focused on AI and data privacy with Watson AI services

Why it matters: Cloud services handle scaling complexities, enabling developers to focus on models and applications, not infrastructure maintenance. This accelerates product launch and reliable growth.
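As a rough illustration of what auto-scaling does behind the scenes, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to the target metric, then round up. The function name, the default limits, and the example numbers are assumptions for this article, and the sketch leaves out the tolerance band and stabilization windows that a real autoscaler applies.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: ceil(current_replicas * current / target), clamped."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (the defaults here are illustrative)
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas averaging 90% CPU against a 60% target would be scaled to six, while the same four replicas at 30% CPU would be scaled down to two.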

Use Efficient Data Management and Streaming

Data is the fuel of AI, but as AI apps grow, managing and processing huge volumes efficiently becomes crucial.

Key data tools and methods:

- Data lakes and warehouses: Centralize diverse data sources while supporting scalability (e.g., Snowflake, Databricks)

- Real-time data streaming: Tools like Apache Kafka process events with low latency as they arrive, allowing AI to react to live changes

- Data versioning: Managing dataset versions to improve reproducibility and model accuracy

- Automated data pipelines: Building scalable processes that prepare data without manual intervention

Why it matters: Good data management prevents bottlenecks and reduces the risk of training or serving on outdated or incorrect data, so the application can scale without degrading results.
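One lightweight way to think about data versioning is to derive a version ID from the data itself, so that any change to the records produces a new ID. The sketch below is an illustration under that assumption, using only the standard library; `dataset_version` is a hypothetical helper, and dedicated versioning tools handle large files, storage, and lineage far more thoroughly.

```python
import hashlib
import json


def dataset_version(records):
    """Return a short content hash identifying this exact set of records."""
    digest = hashlib.sha256()
    # Serialize each record deterministically, then sort so row order does not matter
    lines = sorted(json.dumps(record, sort_keys=True) for record in records)
    for line in lines:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()[:12]
```

Storing this ID next to each trained model makes it possible to check later which exact data a model was trained on.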

Implement Robust MLOps for Automated Scalability

MLOps (Machine Learning Operations) automates the end-to-end lifecycle of AI models, from training and testing to deployment and monitoring. It is critical for scaling AI apps reliably.

Core MLOps tools in 2026:

- MLflow: Open-source platform for managing ML experiments and reproducibility

- Kubeflow: Kubernetes-based system for scaling ML workloads on cloud or on-premises

- Weights & Biases (W&B): Tracks experiments and enhances collaboration for distributed teams

- AWS SageMaker: Offers comprehensive tools to build, train, and deploy models at scale

Why it matters: Without MLOps, scaling AI models manually becomes prone to errors and delays. Automating these processes keeps AI apps reliable and faster to update.
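A common building block in an automated pipeline is a promotion gate: a check that decides whether a newly trained model may replace the one in production. The sketch below shows one possible shape for such a gate. The `EvalReport` fields, the function name, and the thresholds are all assumptions made for illustration, not part of any of the tools listed above.

```python
from dataclasses import dataclass


@dataclass
class EvalReport:
    """Hypothetical evaluation summary for one model version."""
    accuracy: float
    p95_latency_ms: float


def should_promote(candidate, production,
                   min_accuracy_gain=0.0, max_latency_regression=0.10):
    """Promote only if accuracy holds up and latency does not regress too far."""
    accuracy_ok = candidate.accuracy >= production.accuracy + min_accuracy_gain
    latency_limit = production.p95_latency_ms * (1 + max_latency_regression)
    latency_ok = candidate.p95_latency_ms <= latency_limit
    return accuracy_ok and latency_ok
```

Running a check like this in the deployment pipeline turns "is the new model good enough?" from a manual review into a repeatable step.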

Optimize AI Models for Scalability

Model optimization is necessary to maintain reasonable response times and cost-effectiveness as AI apps grow.

Ways to optimize AI models:

- Model compression: Techniques like pruning or quantization reduce model size without major accuracy loss

- Knowledge distillation: Training smaller models that mimic larger models’ behavior for faster deployment

- Using efficient architectures: Models like MobileNet or EfficientNet are designed for fast inference on low-power devices

- Dynamic batching and caching: Process multiple requests together and reuse predictions when possible

Why it matters: Scaling AI isn’t just about infrastructure; it’s about making models run efficiently on increasing user requests, saving computation time and cost.
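To show what quantization means in practice, here is a minimal sketch of symmetric 8-bit quantization on a plain list of weights. It is an illustration only: the function names are hypothetical, and production frameworks quantize per tensor or per channel and usually calibrate on real data. The core idea is the same, though: store small integers plus one scale factor instead of full-precision floats.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using a single shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]
```

Each weight then fits in one byte instead of four, and the rounding error per weight is at most half the scale, which is why accuracy often drops only slightly.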

Monitor and Maintain Scalable AI Applications Continuously

Building it once isn’t enough. Scalable AI applications require ongoing monitoring, feedback, and updates.

Key monitoring tools and practices:

- Performance tracking: Real-time dashboards to watch latency, throughput, and errors

- Drift detection: Alerts for changes in data patterns that degrade model accuracy

- Automated retraining: Systems that update AI models with fresh data to keep them current

- Security and compliance monitoring: Ensuring data privacy and protecting against attacks

Why it matters: Proactive maintenance avoids downtime, poor user experience, and costly failures, so the AI application keeps pace as its user base and data grow.
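Drift detection can start very simply. The sketch below computes the Population Stability Index (PSI), a widely used score that compares how a feature was distributed in a baseline sample with how it is distributed in recent data. The function name, the bin count, and the small floor for empty bins are assumptions for this illustration. A common rule of thumb treats values above roughly 0.25 as significant drift, but that threshold is a convention, not a guarantee.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a recent sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    base = proportions(expected)
    recent = proportions(actual)
    return sum((r - b) * math.log(r / b) for b, r in zip(base, recent))
```

A monitoring job could compute this score per feature on a schedule and raise an alert, or trigger retraining, when it crosses the chosen threshold.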

Fueler: Showcase Your Scalable AI Projects Effectively

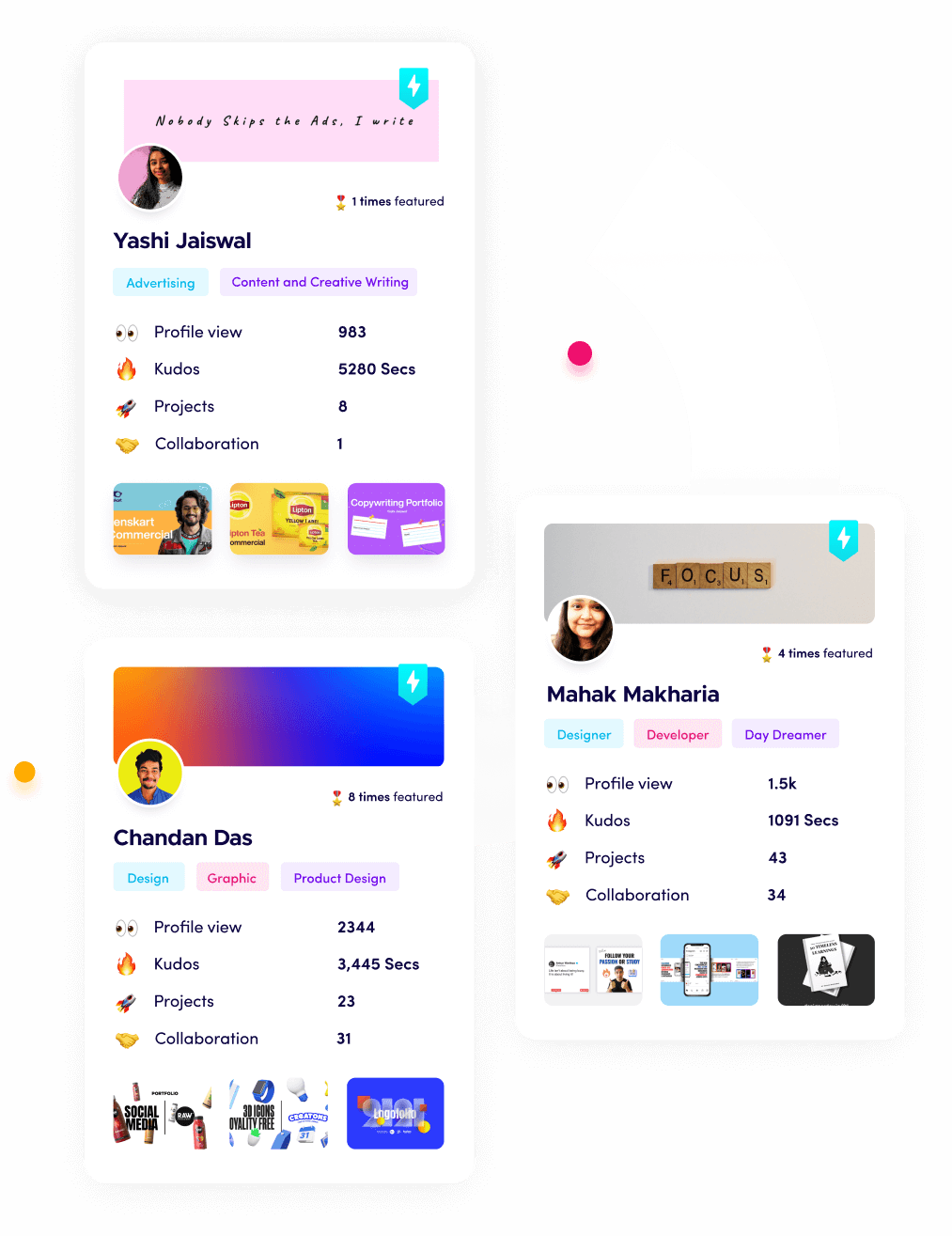

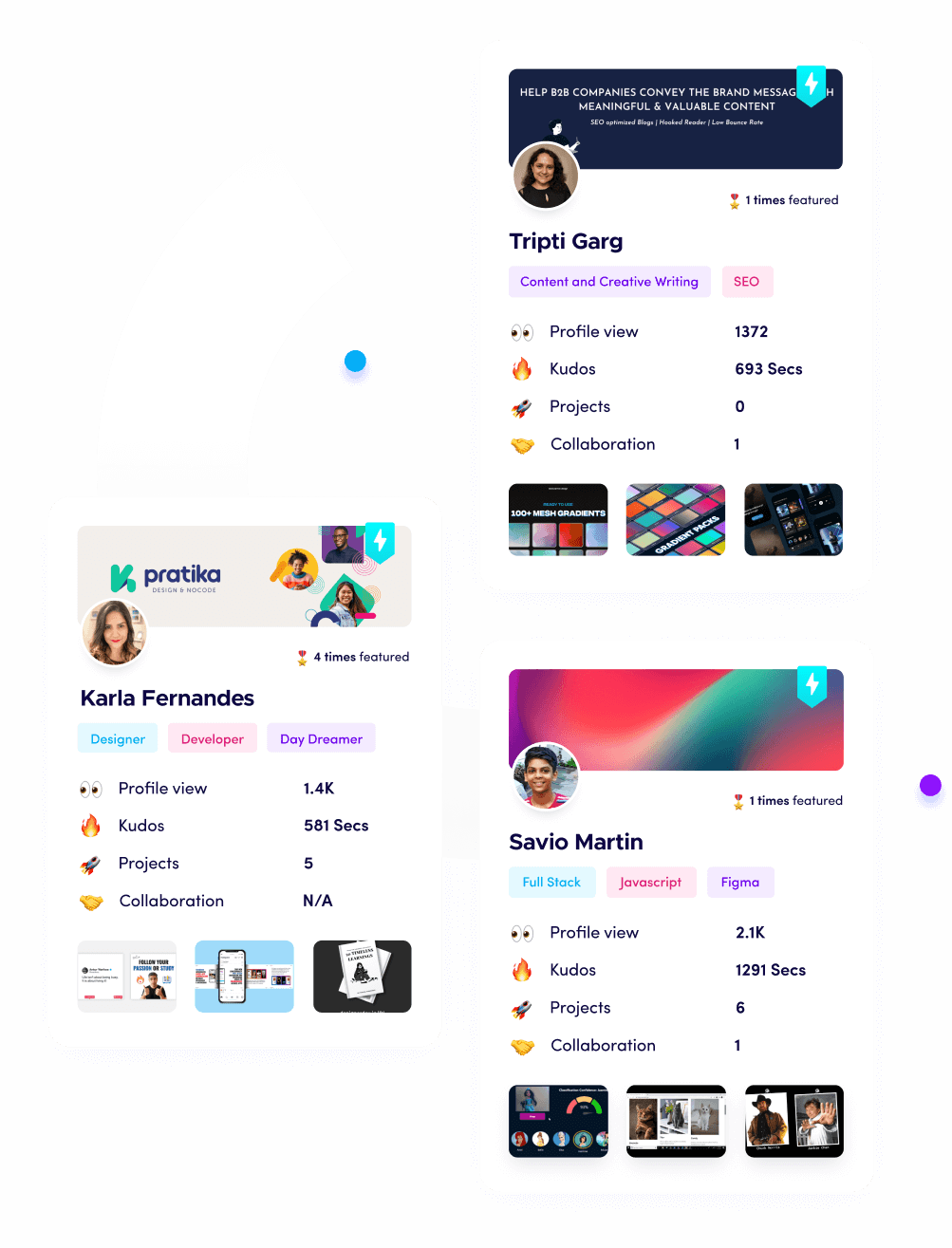

Building scalable AI applications requires not only technical skills but also the ability to present your work. This is where Fueler plays a crucial role. Fueler helps freelancers and professionals build portfolios where they can display detailed projects, assignments, and experiments showcasing their AI and scalability skills.

Using Fueler, you can prove your expertise by presenting actual work samples that demonstrate your ability to handle complex, scalable AI systems. This approach builds trust and attracts clients or employers faster in 2026's competitive market.

Final Thoughts

Building scalable AI applications in 2026 requires a clear understanding of architecture, infrastructure, data, and operations. Success comes from not only implementing efficient models but also designing systems ready to grow without loss of speed or accuracy. Additionally, sharing your work professionally through portfolios such as Fueler gives you an edge in the competitive freelance and job market. With these strategies, you are well equipped to build AI applications that stand the test of growth and time.

FAQs

1. What does it mean for an AI application to be scalable?

Scalability means an AI app can handle growing numbers of users or data without losing performance or accuracy.

2. Which cloud platforms are best for scalable AI in 2026?

Amazon Web Services, Google Cloud Platform, Microsoft Azure, and IBM Cloud are leading platforms offering scalable AI services.

3. How does MLOps help in scaling AI applications?

MLOps automates AI model lifecycle tasks like deployment, monitoring, and retraining, which makes scaling faster and less error-prone.

4. What are some techniques to optimize AI models for scalability?

Model compression, knowledge distillation, efficient architectures, and dynamic batching help optimize AI for large-scale usage.

5. How can Fueler help AI developers in 2026?

Fueler enables professionals to showcase their work through portfolios with detailed projects and assignments, building credibility and attracting clients or employers.

What is Fueler Portfolio?

Fueler is a career portfolio platform that helps companies find the best talent for their organization based on proof of work. You can create your portfolio on Fueler. Thousands of freelancers around the world use it to build professional-looking portfolios and become financially independent.

Sign up for free on Fueler or get in touch to learn more.