AI Model Deployment: Best Practices for 2026

Riten Debnath

11 Oct, 2025

We live in a time where Artificial Intelligence has left the laboratories and entered the heart of businesses, society, and daily life. In 2026, AI is shaping critical workflows from early cancer detection and autonomous supply chains to personalized digital education and voice-driven assistants. Millions of models are being trained every day. But here’s the truth: training a model is no longer the biggest challenge. Getting that model successfully deployed into the real world is what separates a research project from a business solution.

A model running in your Jupyter notebook is nothing more than an experiment. It only becomes valuable when it interacts with actual users, scales to thousands of requests per second, updates itself with changing data, and delivers consistently reliable results.

I’m Riten, founder of Fueler, a platform that helps freelancers and professionals get hired through their work samples. In this article, I’ll walk you through the best practices for deploying AI models in 2026. I will also show you why, beyond technical expertise, presenting your work in a meaningful portfolio is critical. Your projects are not just code. They are proof of your capabilities and the fastest way to build trust with clients or employers.

What is AI Model Deployment in 2026?

To simplify, AI model deployment means taking a trained model and making it available for real-world use. This could be exposing it as an API endpoint, integrating it into a mobile app, embedding it into IoT devices, or running it as part of an enterprise microservice.

In 2026, deployment has evolved. It isn’t just about pushing a model to production anymore. It involves:

- Automating the pipeline from raw data to model training, validation, and release

- Monitoring models in real time for degradation, fairness, and performance

- Running models across diverse environments: cloud, on-premises, and edge devices

- Managing compute costs, latency, and security risks at scale

An AI model today is expected to behave like a live product, not a static piece of code. That is why AI model deployment has become a discipline in itself, often handled by specialized MLOps engineers.
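To make "exposing a model as an API endpoint" concrete, here is a minimal sketch that uses only Python's standard library. The `predict` function is a hypothetical stand-in for a trained model, and the `/`-agnostic handler, the `features` field, and port 8000 are assumptions for illustration. A production service would normally sit behind one of the serving frameworks discussed later.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: returns a score in [0, 1]."""
    if not features:
        raise ValueError("features must be a non-empty list of numbers")
    mean = sum(features) / len(features)
    return max(0.0, min(1.0, mean))


def handle_payload(raw_body):
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(raw_body)
        features = [float(x) for x in payload["features"]]
        return 200, {"score": predict(features)}
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, a missing "features" key, or non-numeric values.
        return 400, {"error": str(exc)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_payload(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8000):
    """Start the server (blocks until interrupted); call from a script entry point."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Keeping validation in `handle_payload`, separate from the HTTP handler, means the request logic can be unit tested without starting a server.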

1. Ensure Reproducibility Across Environments

A common frustration in AI projects is the “it worked on my laptop” problem. A model trained in a local environment might behave differently when moved to staging or production due to mismatched dependencies, library versions, or dataset inconsistencies.

Best Practices

- Containerize everything: Use tools like Docker or Podman to package the model, its dependencies, and runtime so it runs identically across environments.

- Track experiments: Tools like MLflow, Comet, or Weights & Biases help track hyperparameters, training data versions, and model metrics.

- Version control for models and data: Tools such as DVC (Data Version Control) ensure datasets and models are versioned just like code.

- Environment testing: Validate the model in dev, staging, and production with controlled sample data before releasing widely.

Why it matters: Reproducibility prevents unexpected failures during deployment. In high-stakes industries like banking or healthcare, even a minor model mismatch can cause regulatory issues, financial loss, or patient harm.
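One lightweight way to catch environment mismatches, alongside containers and tools like DVC, is to record a fingerprint at training time and compare it before serving. The sketch below is an illustration under assumptions, not a replacement for those tools: the function names and the fingerprint layout are hypothetical.

```python
import hashlib
import platform
import sys
from importlib import metadata


def file_sha256(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def environment_fingerprint(packages, data_files=()):
    """Capture interpreter, package versions, and dataset hashes in one dict."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package is not installed in this environment
    return {
        "python": platform.python_version(),
        "platform": sys.platform,
        "packages": versions,
        "data_sha256": {str(p): file_sha256(p) for p in data_files},
    }


def diff_fingerprints(expected, actual):
    """Return the top-level keys whose values differ between two fingerprints."""
    keys = expected.keys() | actual.keys()
    return sorted(k for k in keys if expected.get(k) != actual.get(k))
```

A typical use would be to save the training-time fingerprint as JSON next to the model artifact, then call `diff_fingerprints` in staging and refuse to start if the result is not empty.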

2. Design for Scalability from Day One

A model with 98% accuracy in the lab can still collapse in production if it isn’t designed to handle scale. Once hundreds of thousands of users start interacting with it, latency spikes, memory leaks, and bottlenecks emerge.

Best Practices

- Use serving frameworks: TensorFlow Serving, TorchServe, or NVIDIA Triton are purpose-built for scalable inference.

- Microservices architecture: Break down AI applications into smaller services that can scale independently, for instance by separating data preprocessing from inference.

- Orchestration with Kubernetes: Tools like Kubeflow run AI workloads on Kubernetes, enabling auto-scaling and resilience.

- Load testing: Simulate real-world user loads using tools like Locust or JMeter before actual release.

Why it matters: Scale is the difference between a prototype and a production-ready application. Without scalability, AI products risk losing user trust the moment adoption grows.
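Dedicated tools like Locust or JMeter are the better choice for real load tests, but the core idea fits in a few lines. The sketch below fires concurrent calls and reports throughput and latency percentiles. `fake_inference` is a hypothetical placeholder for a request to your endpoint, and the request counts are arbitrary.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_inference(request_id):
    """Hypothetical stand-in for one call to the model endpoint."""
    time.sleep(0.001)
    return request_id


def timed_call(fn, arg):
    """Return how long a single call took, in seconds."""
    start = time.perf_counter()
    fn(arg)
    return time.perf_counter() - start


def load_test(fn, total_requests=200, concurrency=20):
    """Run total_requests calls across a thread pool and summarize latency."""
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda i: timed_call(fn, i), range(total_requests)))
    wall = time.perf_counter() - wall_start
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "requests": total_requests,
        "throughput_rps": total_requests / wall,
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        "max_s": max(latencies),
    }
```

Tail latency (p95 and above) usually matters more than the average, because that is what the slowest users actually experience.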

3. Implement Continuous Monitoring and Feedback

Unlike static software, AI models degrade over time as live data shifts away from the data they were trained on. This is known as model drift. For example, a fraud detection model trained on last year’s data may fail to detect new fraud techniques today. Continuous monitoring allows you to detect drift early and retrain before performance crashes.

Best Practices

- Set up dashboards: Tools like Evidently AI, Arize AI, and WhyLabs let you monitor accuracy, precision, recall, and drift in real time.

- Track data quality: Monitor whether live data matches the distribution of training data. A sudden increase in anomalies often signals drift.

- Shadow deployments: Run new model versions in parallel with current ones to compare performance without exposing users to risk.

- Retrain pipelines: Automate retraining when performance dips below thresholds.

Why it matters: In 2026, AI models are expected to be living products that evolve with users and data. Monitoring ensures reliability and longevity.
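Checking whether live data still matches the training distribution can be sketched with the Population Stability Index (PSI), one common drift statistic. This is a simplified illustration, not what any of the tools above necessarily compute. The 0.25 retraining threshold is a widely used rule of thumb and should be treated as an assumption to tune, as should the bin count.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(psi_value, threshold=0.25):
    """Flag a feature for retraining when drift exceeds the chosen threshold."""
    return psi_value > threshold
```

In practice you would compute this per feature on a schedule and feed `should_retrain` into the automated retraining pipeline.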

4. Prioritize Efficiency and Cost Optimization

AI models demand heavy computing resources. Without cost optimization, enterprises may run into million-dollar cloud bills. If a sentiment analysis task can be done with a smaller model at a fraction of the cost, deploying a massive transformer wastes resources.

Best Practices

- Use optimized runtimes: Deploy via ONNX Runtime, TensorRT, or OpenVINO to cut inference latency.

- Distill large models: Apply knowledge distillation to train smaller yet effective student models.

- Batching and caching: If real-time predictions aren’t required, batch requests to reduce costs. Cache outputs for repeated queries.

- Edge deployment: Run inference closer to users (via edge AI chips) to reduce both cost and latency.

Why it matters: In 2026, cloud resources are expensive. AI teams that optimize compute usage save significant operational costs and win trust from businesses focused on ROI.
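Batching and caching are easy to prototype. In the sketch below, `run_model_batch` is a hypothetical stand-in for an expensive batched model call, and the `CALLS` counter exists only to show how many times the model is actually invoked. The cache size and batch size are arbitrary assumptions.

```python
from functools import lru_cache

CALLS = {"model": 0}  # counts model invocations, for illustration only


def run_model_batch(texts):
    """Hypothetical batched sentiment model; one call handles many inputs."""
    CALLS["model"] += 1
    return ["positive" if "good" in t.lower() else "negative" for t in texts]


@lru_cache(maxsize=10_000)
def cached_predict(text):
    """Serve repeated queries from memory instead of re-running the model."""
    return run_model_batch([text])[0]


def batched(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def predict_offline(texts, batch_size=32):
    """Process a backlog in batches when real-time answers are not required."""
    results = []
    for batch in batched(texts, batch_size):
        results.extend(run_model_batch(batch))
    return results
```

Note that an in-process cache like this is per worker. A shared cache would be needed once the service runs on multiple replicas.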

5. Strengthen Security and Compliance

AI models process sensitive data: financial transactions, health records, and biometric scans. A single breach can lead to massive penalties under laws like GDPR, HIPAA, or India’s Digital Personal Data Protection Act, 2023.

Best Practices

- Encrypt data: Encrypt data both at rest and in transit.

- Secure endpoints: Harden exposed endpoints against adversarial attacks and input poisoning.

- Ensure fairness: Regularly audit models for bias in protected attributes like gender or race.

- Comply with regulations: Stay updated with evolving global compliance standards.

Why it matters: Secure and fair deployment is non-negotiable in 2026. Ethical AI isn’t just good practice. In a growing number of jurisdictions, it is a regulatory requirement.
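A bias audit can start with something as simple as comparing positive-prediction rates across groups, often called the demographic parity gap. The sketch below shows that single metric. It is one of several fairness measures, and what counts as an acceptable gap is a policy decision that this code does not make for you.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

For example, with predictions `[1, 1, 0, 1, 0, 0, 0, 1]` and groups `["a", "a", "a", "a", "b", "b", "b", "b"]`, group `a` is approved 75% of the time and group `b` 25% of the time, so the gap is 0.5.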

6. Automate Deployment with MLOps

In 2026, MLOps is to AI what DevOps is to software. Building automation into the AI lifecycle avoids manual errors and accelerates releases.

Best Practices

- CI/CD pipelines: Use GitHub Actions, Jenkins, or GitLab CI to automatically build, test, and deploy models.

- Data pipelines: Automate data ingestion, cleaning, and labeling. Tools like Airflow integrate data with deployment pipelines.

- Model registries: Store and manage production-grade models in MLflow registry or SageMaker model registry.

- Feedback loop integration: Ensure real-world results flow back into the training pipeline.

Why it matters: Automating deployment shortens the time from experiment to production, making AI cycles faster and business-ready.
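Inside a CI/CD pipeline, the step that decides whether a model may ship is often just a script that compares metrics and returns an exit code. Here is a minimal sketch of such a gate. The metric names and all thresholds are hypothetical and would come from your own evaluation job and model registry.

```python
def promotion_gate(candidate, production, min_accuracy=0.90,
                   max_p95_latency_ms=100.0, max_regression=0.01):
    """Return a list of reasons the candidate model should NOT be promoted."""
    failures = []
    if candidate["accuracy"] < min_accuracy:
        failures.append(
            f"accuracy {candidate['accuracy']:.3f} is below {min_accuracy:.3f}"
        )
    if candidate["p95_latency_ms"] > max_p95_latency_ms:
        failures.append(
            f"p95 latency {candidate['p95_latency_ms']:.0f} ms exceeds "
            f"{max_p95_latency_ms:.0f} ms"
        )
    if production["accuracy"] - candidate["accuracy"] > max_regression:
        failures.append("accuracy regressed against the production model")
    return failures


def main(candidate, production):
    """Print blocking reasons and return a process exit code (0 = promote)."""
    failures = promotion_gate(candidate, production)
    for reason in failures:
        print(f"BLOCKED: {reason}")
    return 1 if failures else 0
```

A pipeline job in GitHub Actions, Jenkins, or GitLab CI would pass the result of `main(...)` to `sys.exit`, so a non-zero code fails the job and stops the release.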

7. Build Human-in-the-Loop Systems

Not every decision should be blindly trusted to AI, especially in critical areas like healthcare, law, or finance. Human oversight ensures accountability.

Best Practices

- Confidence thresholds: Send only high-confidence predictions to automation and escalate uncertain cases to humans.

- Explainability tools: Use SHAP or LIME to give humans insight into model reasoning.

- Review dashboards: Give human operators interfaces to accept, reject, or refine AI decisions.

- Gradual automation: Deploy AI in tandem with human experts, increasing autonomy only with proven reliability.

Why it matters: Human-in-the-loop systems make AI deployments trustworthy, safe, and ethically robust.
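Confidence-threshold routing is simple to express in code. The sketch below assumes the model returns a dictionary of class probabilities. The `Decision` type, the route names, and the 0.9 threshold are illustrative assumptions, and the threshold in particular should be set from validation data for your use case.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    route: str  # "auto" or "human_review"


def route_prediction(probabilities, threshold=0.9):
    """Automate confident predictions and escalate uncertain ones to a person."""
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    route = "auto" if confidence >= threshold else "human_review"
    return Decision(label, confidence, route)
```

Gradual automation then becomes a matter of lowering the threshold step by step as the reviewed cases confirm the model is reliable.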

8. Track Results and Communicate Through Portfolios

A crucial, often overlooked step is communicating the results of your AI deployment. In professional careers, showing how you deployed a model matters as much as the results themselves. Companies look not just for coding skill but for proof of real-world impact.

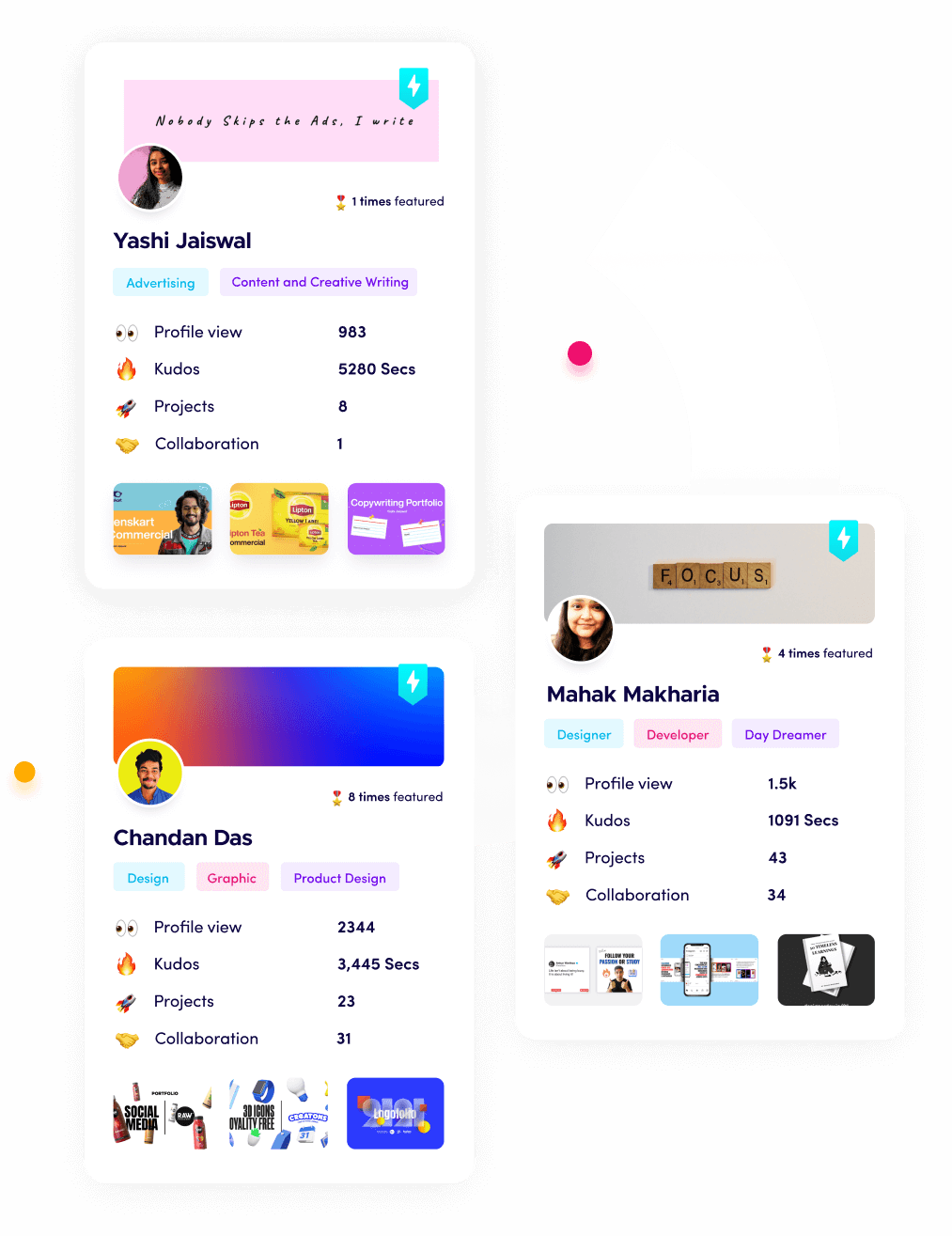

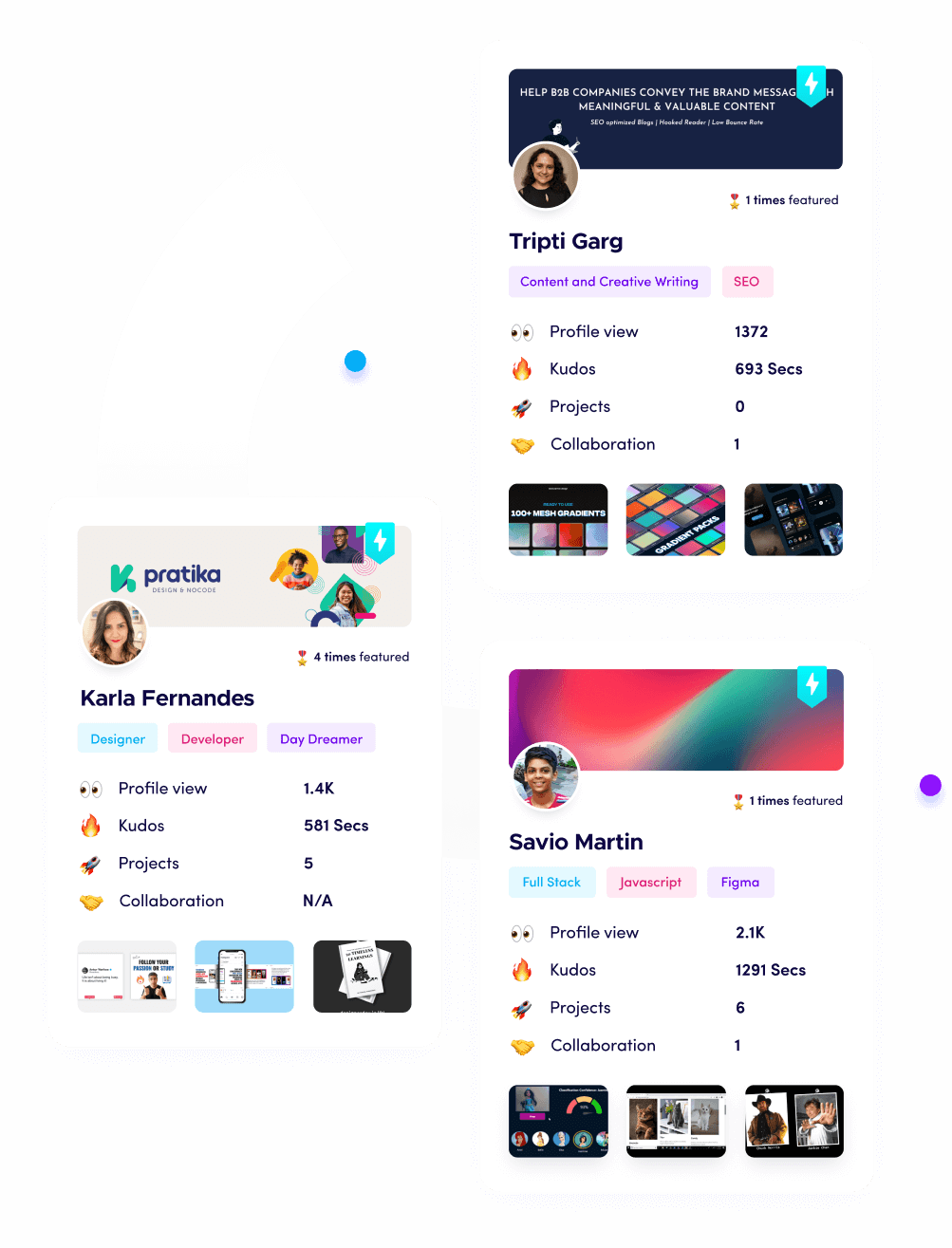

This is where platforms like Fueler play a key role. Instead of merely sharing raw code, professionals upload their case studies, deployment setups, and performance metrics. A portfolio that shows “I deployed an image recognition model using TensorFlow Serving and Kubernetes, monitored with Evidently AI, and scaled to 50,000 users” speaks volumes more than a GitHub repo.

Why it matters: AI portfolios separate learners from professionals. In 2026, employers hire on proof, not promises. Deployment stories are how you stand out.

Final Thoughts

By 2026, deploying AI models requires more than just technical coding skills. It demands reproducibility, scalability, monitoring, cost-efficiency, automation, compliance, and continuous oversight. This is what transforms models from academic exercises to reliable systems that businesses trust and invest in.

But remember, it is not just about building models. It is about showing the impact of those models in the real world. The AI professionals who thrive in 2026 are those who can deploy effectively and also communicate deployments convincingly through polished portfolios.

FAQs

1. What are the best AI model deployment tools in 2026?

Some of the best tools include TensorFlow Serving, TorchServe, NVIDIA Triton Inference Server, Docker, Kubernetes with Kubeflow, and AWS SageMaker.

2. How do I deploy a machine learning model for free?

Free options include using Google Colab for prototyping, Hugging Face Spaces with Gradio for demos, and limited free tiers on Render or AWS Lambda. Heroku no longer offers a free tier.

3. What is MLOps and why is it important for deployment?

MLOps is the set of practices that automate the ML lifecycle, from data to model serving. It ensures reliability, speed, and consistency when moving models to production.

4. How do I deploy AI models on edge devices in 2026?

Use lightweight runtimes like TensorFlow Lite (now branded LiteRT), PyTorch Mobile or its successor ExecuTorch, or ONNX Runtime Mobile. These are optimized for smartphones, IoT devices, and embedded systems.

5. What are the biggest risks of AI deployment?

Key risks include model drift, lack of scalability, high compute costs, security breaches, and biased predictions. Without addressing these, AI systems fail in the real world.

What is Fueler Portfolio?

Fueler is a career portfolio platform that helps companies find the best talent for their organization based on proof of work. Thousands of freelancers around the world use Fueler to create professional-looking portfolios and become financially independent, and you can create yours there too.

Sign up for free on Fueler or get in touch to learn more.