AI in University Admissions: Fairness and Bias in 2025

Riten Debnath

31 May, 2025

What if your college admission didn’t depend on luck, connections, or hidden bias, but on a system that’s truly fair, transparent, and data-driven? In 2025, universities are using artificial intelligence to make admissions more objective and inclusive—but they’re also learning that fairness in AI is a challenge that requires constant vigilance.

I’m Riten, founder of Fueler—a platform that helps professionals and freelancers get hired through their work samples. In this article, I’ll walk you through how AI is shaping university admissions in 2025, focusing on fairness and bias. Just as a strong portfolio proves a candidate’s abilities, universities must prove their admissions processes are fair, unbiased, and trustworthy—because in the end, credibility is everything.

The Transformation of Admissions with AI

AI is now central to admissions at leading universities. These systems process thousands of applications, analyzing academic performance, extracurriculars, essays, and recommendations. The goal is to reduce human bias and make decisions based on consistent, data-driven criteria.

- AI can review applications faster and more consistently than human committees, reducing errors and fatigue.

- Algorithms are trained to recognize a wide range of talents, backgrounds, and achievements, not just test scores.

- Universities use AI to streamline the admissions process, improve transparency, and offer faster decisions to applicants.

How AI Admissions Systems Work

Modern AI admissions platforms use machine learning models trained on years of historical admissions data. These models are designed to identify qualities that predict student success and match applicants to the university’s goals.

- AI analyzes quantitative data (grades, test scores) and qualitative data (essays, recommendations, interviews).

- Natural language processing (NLP) tools evaluate essays for originality, clarity, and alignment with institutional values.

- The best systems include explainable AI, so admissions officers can see why a candidate was selected or rejected and ensure fairness.
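To make the idea of explainable AI concrete, here is a minimal sketch of a score that can be broken down feature by feature. It assumes a simple weighted sum, which is far simpler than a real admissions model. The weights, the feature names, and the `explain_score` function are all hypothetical and only illustrate how an officer might see which factors drove a result.

```python
# Hypothetical feature weights; a real system would learn these from data.
WEIGHTS = {"gpa": 0.5, "test_score": 0.3, "essay_score": 0.2}


def explain_score(features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the total score and each feature's contribution to it.

    Features are assumed to be pre-normalized to the range 0..1.
    """
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    return sum(contributions.values()), contributions


# Made-up applicant values for illustration only.
score, parts = explain_score({"gpa": 0.9, "test_score": 0.8, "essay_score": 0.5})

# List the contributions from largest to smallest, then the total.
for name, value in sorted(parts.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value:.2f}")
print(f"total: {score:.2f}")
```

A weighted sum is used here because every contribution can be read off directly. More complex models usually need a separate explanation method.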

Reducing Human Bias in Admissions

One of the main promises of AI in admissions is reducing human bias—whether conscious or unconscious. AI can help universities create a more level playing field for all applicants.

- Algorithms can be configured to exclude attributes such as race, gender, or socioeconomic status, so that scoring focuses on merit and potential.

- AI systems are regularly audited for fairness, with adjustments made to eliminate any detected bias.

- Universities use blind review processes, where personal identifiers are removed from applications before AI analysis.
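The blind review step described above can be sketched in a few lines. This is only an illustration: the field names in `IDENTIFYING_FIELDS` and the `redact` helper are hypothetical, and a real application schema will look different.

```python
# Hypothetical field names; a real application schema will differ.
IDENTIFYING_FIELDS = {"name", "gender", "race", "date_of_birth", "address", "photo_url"}


def redact(application: dict) -> dict:
    """Return a copy of the application without the identifying fields."""
    return {k: v for k, v in application.items() if k not in IDENTIFYING_FIELDS}


# Made-up application record for illustration only.
application = {"name": "A. Applicant", "gender": "F", "gpa": 3.8, "essay": "..."}
blind = redact(application)
print(blind)
```

Note that this sketch only drops whole fields. Free text such as essays can still contain identifying details, so dropping fields alone does not guarantee a fully blind review.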

The Challenge of Algorithmic Bias

While AI can reduce some forms of bias, it can also introduce new ones if not carefully managed. In 2025, universities are investing heavily in fairness audits and ethical oversight.

- AI models can inherit bias from historical data, especially if past admissions favored certain groups.

- Regular audits and third-party reviews are essential to identify and correct algorithmic bias.

- Universities are transparent about their use of AI, publishing reports on fairness, outcomes, and any corrective actions taken.
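One simple check a fairness audit might include is comparing admit rates across groups. The sketch below is an assumption on my part, not a description of any university's audit. It uses made-up data, hypothetical group labels, and a 0.8 threshold borrowed from the "four-fifths" heuristic used in US employment selection guidelines, which is not an admissions standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, admitted) pairs -> admit rate per group."""
    totals, admits = defaultdict(int), defaultdict(int)
    for group, admitted in decisions:
        totals[group] += 1
        admits[group] += int(admitted)
    return {g: admits[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up sample data for illustration only.
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: flag the model for closer review if the ratio is below 0.8.
flagged = ratio < 0.8
print(rates, round(ratio, 3), flagged)
```

A low ratio does not prove discrimination by itself. It is a signal that the model and its training data deserve a closer look.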

Ensuring Transparency and Accountability

Transparency is critical for building trust in AI-powered admissions. Universities must show applicants, families, and regulators that their systems are fair and accountable.

- Explainable AI tools allow admissions officers to review and justify every decision.

- Applicants can request feedback on their application to understand how decisions were made.

- Universities publish annual reports on admissions outcomes, diversity, and fairness metrics.

AI and Holistic Admissions

While AI can process data at scale, universities still value holistic admissions—considering the full range of an applicant’s experiences and potential.

- AI is used to shortlist candidates, but final decisions often involve human review panels.

- Universities combine AI insights with interviews, portfolio reviews, and other qualitative assessments.

- This hybrid approach blends the speed and consistency of AI with the empathy and judgment of experienced admissions staff.
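The hybrid approach can be pictured as a triage step in which the model sorts applications and people make the decisions. The sketch below is hypothetical: the `Applicant` class, the `triage` function, and both thresholds are illustrative assumptions, not values any university has published.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    applicant_id: str
    model_score: float  # assumed to be in the range 0..1


def triage(applicants, shortlist_at=0.7, review_band=0.1):
    """Split applicants into a shortlist, a borderline queue, and the rest.

    The shortlist and the borderline queue are both meant for human panels.
    """
    shortlist, borderline, rest = [], [], []
    for a in applicants:
        if a.model_score >= shortlist_at:
            shortlist.append(a)
        elif a.model_score >= shortlist_at - review_band:
            borderline.append(a)
        else:
            rest.append(a)
    return shortlist, borderline, rest


# Made-up scores for illustration only.
applicants = [Applicant("a1", 0.9), Applicant("a2", 0.65), Applicant("a3", 0.3)]
shortlist, borderline, rest = triage(applicants)
print(len(shortlist), len(borderline), len(rest))
```

What happens to the remaining applications is a policy choice that this sketch leaves open. The borderline queue exists so that close calls get a second look from a person.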

Legal, Ethical, and Social Considerations

AI in admissions raises important legal and ethical questions. Universities must comply with anti-discrimination laws, data privacy regulations, and ethical standards.

- Legal teams review AI models to ensure compliance with local and national regulations.

- Ethical committees oversee the design, deployment, and monitoring of AI admissions systems.

- Universities engage with students, families, and advocacy groups to address concerns and improve their processes.

The Future of Fair Admissions

Looking forward, AI will continue to evolve, offering new tools for fairness, personalization, and efficiency. The challenge will be to balance automation with human oversight and to constantly test for unintended bias.

- Universities will invest in more advanced fairness algorithms, explainable AI, and regular audits.

- The admissions process will become more personalized, with AI recommending programs, scholarships, and support services tailored to each applicant’s needs.

- Transparency and accountability will remain top priorities, ensuring that every applicant gets a fair shot.

Final Thought

AI is transforming university admissions in 2025, making the process faster, more transparent, and potentially fairer. But true fairness requires constant vigilance, ethical oversight, and a commitment to transparency. The universities that lead will be those that combine advanced technology with a culture of trust, accountability, and continuous improvement, proving their fairness with real data, just as a strong portfolio proves a candidate’s skills.

FAQs

1. How do universities use AI in admissions in 2025?

Universities use AI to analyze applications, reduce human bias, and make faster, more consistent admissions decisions.

2. What are the risks of bias in AI-powered admissions?

AI can inherit bias from historical data, so universities must regularly audit and update their models to prevent discrimination.

3. How do universities ensure transparency in AI admissions?

They use explainable AI, publish fairness reports, and allow applicants to request feedback on decisions.

4. Are final admissions decisions made by AI alone?

No. Most universities use AI to shortlist candidates, but final decisions often involve human review panels for holistic assessment.

5. How can universities prove their admissions process is fair?

By publishing data on outcomes, conducting regular fairness audits, and being transparent about their AI systems—similar to how Fueler helps professionals prove their skills with real work samples.

What is Fueler Portfolio?

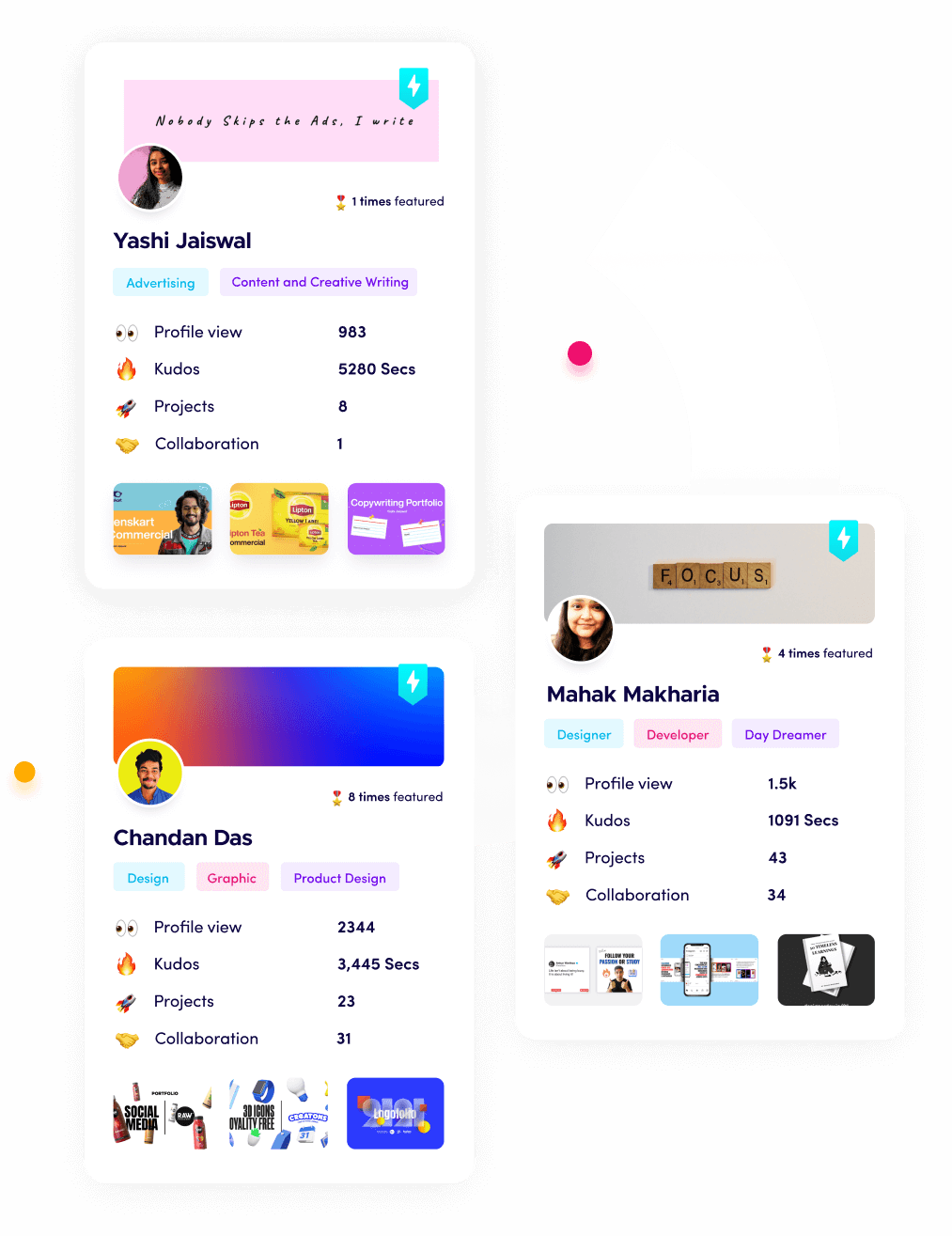

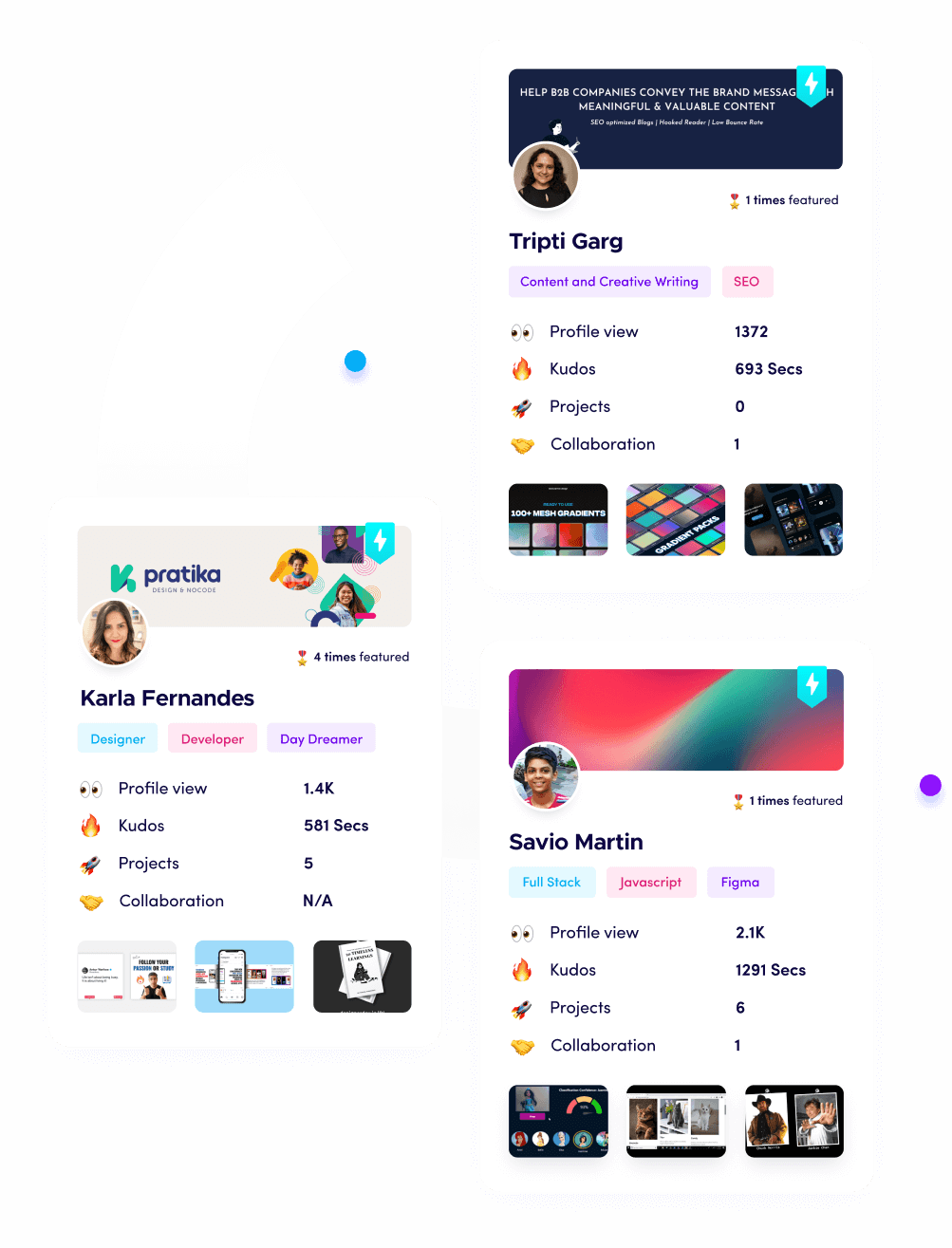

Fueler is a career portfolio platform that helps companies find the best talent for their organization based on proof of work.

You can create your portfolio on Fueler. Thousands of freelancers around the world use Fueler to create professional-looking portfolios and become financially independent. Discover inspiration for your portfolio.

Sign up for free on Fueler or get in touch to learn more.