AI Governance Frameworks: Best Practices for 2026

Riten Debnath

12 Oct, 2025

As artificial intelligence becomes integral to business operations and decision-making, effective AI governance has emerged as a strategic imperative in 2026. AI governance frameworks provide the structure, policies, and standards organizations need to manage AI risks, ensure ethical use, maintain regulatory compliance, and maximize AI’s value responsibly. Without a well-designed governance system, companies face serious challenges, including bias, lack of transparency, security vulnerabilities, and legal repercussions. Establishing robust governance is key to building trust with stakeholders, steering AI initiatives safely, and sustaining competitive advantage in a complex regulatory landscape.

I’m Riten, founder of Fueler, a platform that helps freelancers and professionals get hired through their work samples. This article presents best practices, practical steps, and examples for building strong AI governance frameworks in organizations today, so that leaders can confidently navigate the opportunities and challenges AI presents.

Understand the Foundations of AI Governance

Before adopting or refining AI governance frameworks, leaders must comprehend the core components shaping responsible AI management.

- Policy and Standards Development: Setting clear policies on AI ethics, data privacy, model transparency, and risk management that align with organizational values and industry regulations.

- Roles and Responsibilities: Designating accountable executives, data scientists, compliance officers, and cross-functional committees so that AI outcomes and risk mitigation have clear owners.

- Risk Assessment and Management: Continuously evaluating potential AI risks such as bias, errors, security breaches, and operational failures to implement proactive controls.

- Transparency and Explainability: Mandating clear documentation and explainability of AI models and decision processes to ensure stakeholder understanding and trust.

- Compliance and Auditing: Establishing mechanisms to monitor adherence to internal policies and external regulations, supported by regular audits and reporting.

Why it matters: These foundational elements create a structured approach that balances innovation with control, fostering accountability and resilience.

Best Practice 1: Establish Multidisciplinary AI Governance Bodies

Effective AI governance relies on collaboration across legal, compliance, technical, business, and ethical domains.

- Form AI steering committees or ethics boards with representatives from diverse disciplines to review AI projects, policies, and risks.

- Ensure these groups have clear authority to approve AI deployments, set standards, and intervene when ethical or legal concerns arise.

- Regularly update governance bodies on emerging AI technologies, regulatory changes, and organizational challenges to maintain relevance and effectiveness.

- Promote an open culture where concerns can be raised safely, encouraging transparency and ethical vigilance across teams.

Why it matters: Multidisciplinary oversight enables balanced decision-making that incorporates technical feasibility, ethical considerations, and business objectives.

Best Practice 2: Implement Comprehensive Risk Management Frameworks

Proactively identifying and managing AI-related risks reduces negative impacts and legal exposure while enhancing outcomes.

- Develop standardized processes for assessing risks throughout the AI lifecycle—from data collection and model training to deployment and monitoring.

- Use bias detection tools, fairness audits, and scenario testing to surface and mitigate potential discriminatory or erroneous behaviors.

- Assess cybersecurity vulnerabilities specific to AI systems, ensuring data integrity, model robustness, and resistance to adversarial attacks.

- Create incident response plans and escalation protocols for AI failures or ethical breaches, minimizing harm and restoring trust swiftly.

Why it matters: Systematic risk management transforms AI governance from reactive compliance to proactive stewardship, improving resilience and trustworthiness.
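
To make the fairness audit idea above more concrete, here is a minimal sketch in Python. It compares the rate of favorable decisions across groups and flags the model for review when the lowest rate falls too far below the highest. The sample data, the group labels, and the 0.8 threshold are assumptions for illustration only (0.8 echoes the "four-fifths" rule of thumb); your governance body would choose the metric and threshold that fit its own context.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the model produced the favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical audit sample: (group, favorable decision?)
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

REVIEW_THRESHOLD = 0.8  # assumed policy value, set by the governance body

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 2), needs_review)
```

A single ratio like this is only a screening signal. A flagged result would normally trigger the deeper review, scenario testing, and escalation steps described in this section rather than an automatic verdict.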

Best Practice 3: Prioritize Transparency and Explainability

Clear communication about how AI systems function and make decisions is essential to stakeholder trust and regulatory compliance.

- Document AI models’ development processes, data sources, assumptions, limitations, and performance metrics in accessible formats.

- Choose or adapt AI algorithms to balance predictive accuracy with interpretability suitable for the application context (e.g., highly regulated or customer-facing uses).

- Deliver explainable AI outputs to end-users and affected parties, enabling them to understand, contest, or appeal decisions as necessary.

- Educate internal stakeholders on AI capabilities and risks to support informed oversight and ethical use.

Why it matters: Transparency combats the “black box” problem, fostering accountability and user confidence while meeting growing regulatory demands.
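
One lightweight way to put the documentation practice above into action is a structured record per model that reviewers can check for completeness. The sketch below is an illustration, not a standard: the `ModelRecord` class, its field names, and the example values are all hypothetical, chosen to mirror the items listed in this section (data sources, assumptions, limitations, and performance metrics).

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """Minimal documentation record for one AI model (illustrative fields)."""

    name: str
    owner: str
    intended_use: str
    data_sources: list = field(default_factory=list)
    assumptions: list = field(default_factory=list)
    limitations: list = field(default_factory=list)
    metrics: dict = field(default_factory=dict)

    def missing_fields(self):
        """Names of fields left empty, so reviewers can block incomplete records."""
        return [key for key, value in asdict(self).items() if not value]


# Hypothetical example values, for illustration only.
record = ModelRecord(
    name="credit-risk-scorer",
    owner="risk-analytics-team",
    intended_use="Rank loan applications for manual review",
    data_sources=["internal loan history"],
    limitations=["Not validated for all applicant segments"],
    metrics={"auc": 0.81},
)

print(record.missing_fields())  # the "assumptions" field was left empty
print(json.dumps(asdict(record), indent=2))
```

A governance committee could require that `missing_fields()` returns an empty list before a model is approved for deployment, and publish the JSON output in whatever accessible format its stakeholders prefer.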

Best Practice 4: Embed Data Privacy and Security Protections

Protecting personal data and safeguarding AI systems against threats are foundational to responsible AI governance.

- Enforce data governance policies ensuring minimal data collection, secure storage, and controlled access aligned with privacy regulations (GDPR, CCPA, etc.).

- Apply data anonymization, encryption, and consent management techniques throughout AI workflows.

- Regularly audit AI systems for security vulnerabilities, patch known issues, and implement robust defenses against adversarial inputs or data poisoning.

- Train staff on data privacy principles and security best practices to reduce human error risks.

Why it matters: Ensuring privacy and security maintains regulatory compliance, protects reputation, and preserves customer and stakeholder trust.
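
As a rough illustration of the data minimization and anonymization points above, the sketch below drops every field a model does not need and replaces the direct identifier with a keyed hash, using only Python's standard library. The field names, the allow-list, and the key are assumptions for this example. Note that this technique is pseudonymization rather than full anonymization: anyone holding the key can re-link records, so under regulations such as GDPR the output is generally still treated as personal data.

```python
import hashlib
import hmac

# Fields the hypothetical model actually needs; everything else is dropped.
ALLOWED_FIELDS = {"customer_id", "age_band", "region"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict, key: bytes) -> dict:
    """Apply data minimization, then pseudonymize the direct identifier."""
    kept = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    kept["customer_id"] = pseudonymize(str(kept["customer_id"]), key)
    return kept


# Assumption: in practice the key comes from a secrets manager, not source code.
SECRET_KEY = b"example-key-for-illustration-only"

raw = {
    "customer_id": "C-1001",
    "email": "a@example.com",
    "age_band": "30-39",
    "region": "EU",
}

clean = prepare_record(raw, SECRET_KEY)
print(clean)
```

Using a keyed hash instead of a plain hash makes it harder to reverse identifiers by guessing common values, and the same input always maps to the same token, so records can still be joined across datasets that share the key.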

Best Practice 5: Foster a Culture of Ethical AI and Continuous Learning

Technical controls alone can’t ensure responsible AI; ethical awareness and ongoing education throughout the organization are equally vital.

- Embed AI ethics training for all employees, from developers to executives, emphasizing principles of fairness, accountability, and respect for human rights.

- Encourage reporting and open discussion of ethical dilemmas, biases, or unintended AI consequences.

- Monitor emerging AI ethical issues, academic research, and industry debates to adapt governance frameworks proactively.

- Incentivize ethical innovation and recognize teams that uphold AI responsibility and transparency as core values.

Why it matters: A values-driven culture empowers people to uphold AI ethics in practice, preventing harm and fostering sustainable innovation.

How Fueler Can Help

For executives and AI leaders, Fueler provides a platform to showcase your governance frameworks, ethical AI initiatives, and compliance achievements. Demonstrating your commitment to responsible AI strengthens credibility with customers, partners, and regulators, unlocking new opportunities and trust in your AI-driven enterprise.

Final Thoughts

In 2026, AI governance frameworks are no longer optional—they are essential enablers of successful, ethical, and compliant AI adoption. By building robust multidisciplinary governance bodies, comprehensive risk management, transparency, privacy protections, and a culture of ethics, organizations can unlock AI’s transformative potential safely and sustainably. Strong governance systems nurture trust, drive innovation, and position businesses as responsible leaders in the AI-powered future.

FAQs

1. What is the purpose of an AI governance framework?

To provide structured policies, roles, and processes that ensure AI is used ethically, responsibly, and compliantly across the organization.

2. Who should be involved in AI governance?

A multidisciplinary team including legal, compliance, data science, business leaders, and ethics experts ensures balanced oversight.

3. How does AI risk management contribute to governance?

It proactively identifies, assesses, and mitigates risks like bias, security threats, and operational failures to protect stakeholders.

4. Why is transparency important in AI systems?

Transparency builds trust, enables accountability, and helps meet regulatory requirements by explaining AI decisions.

5. How can organizations promote a culture of ethical AI?

Through training, open dialogue on ethical issues, continuous learning, and recognition of responsible AI practices.

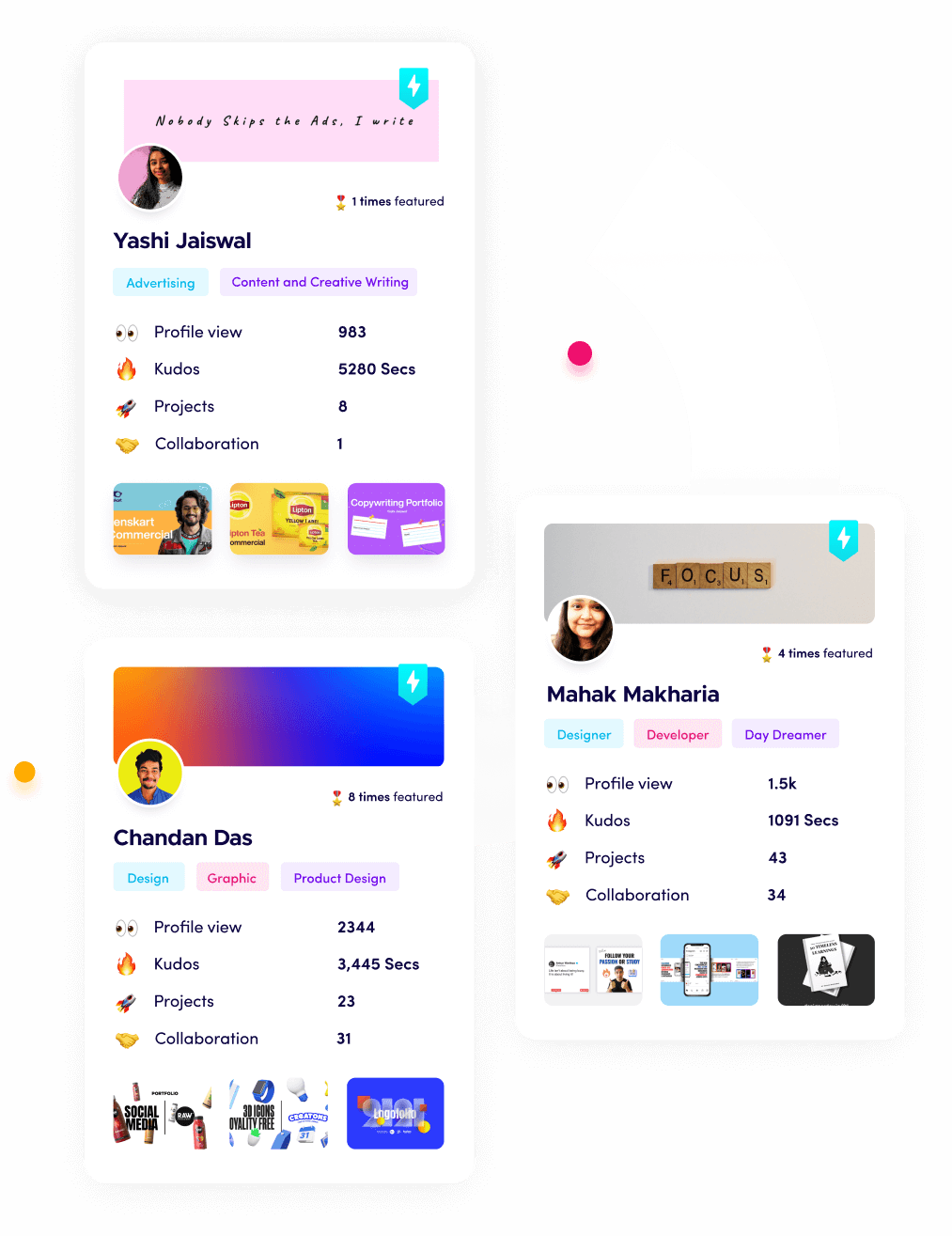

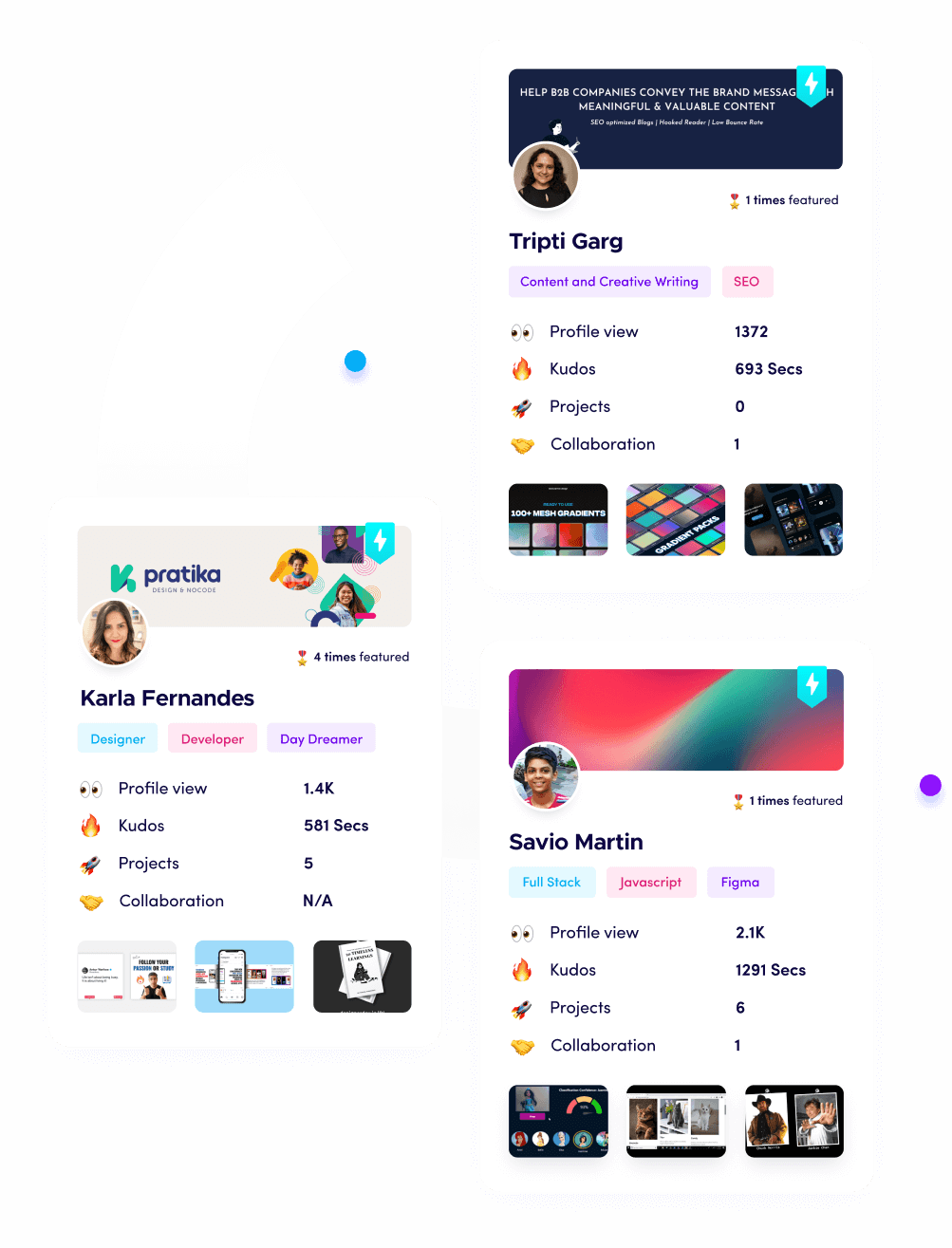
